At the Mercy of the Scanout

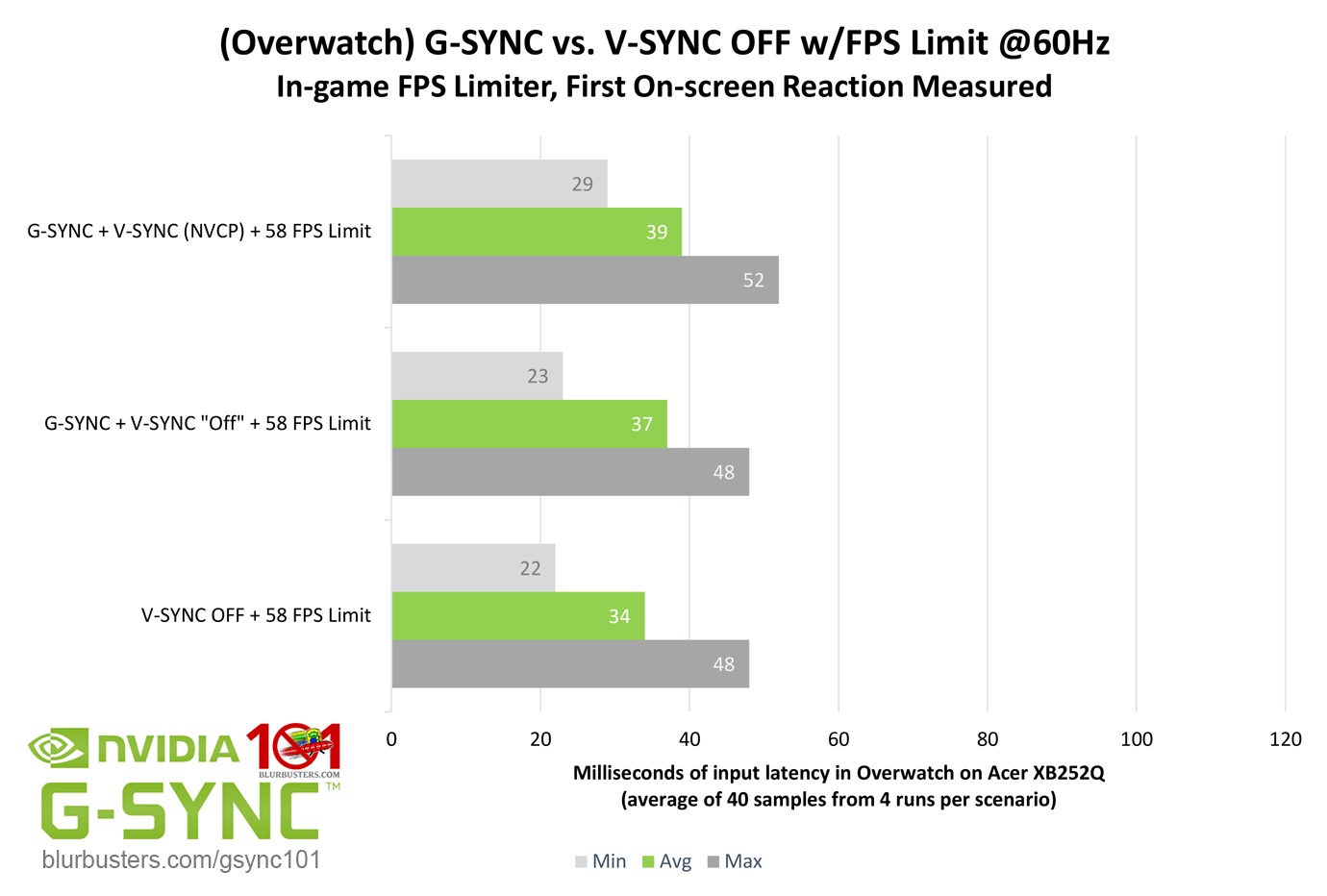

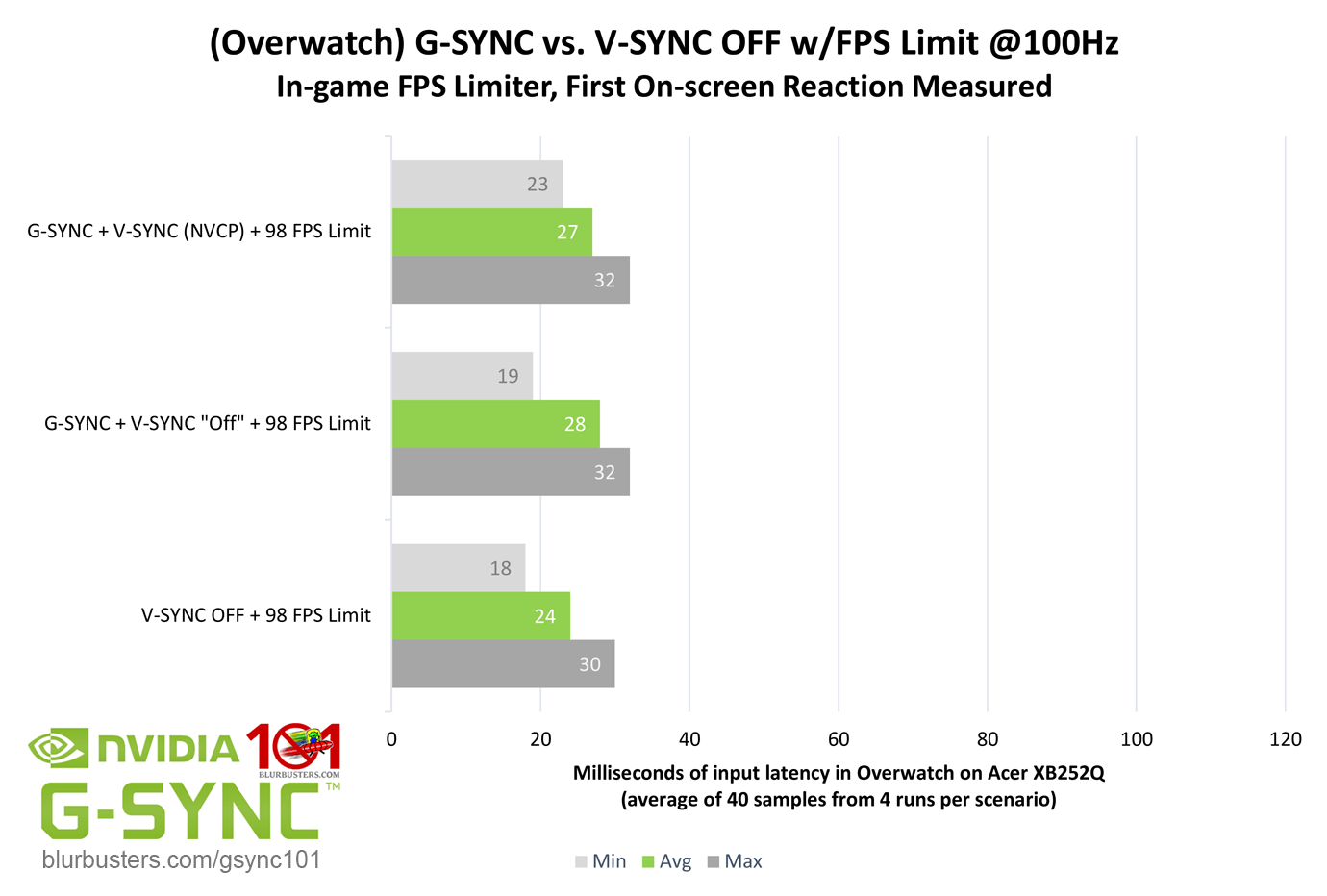

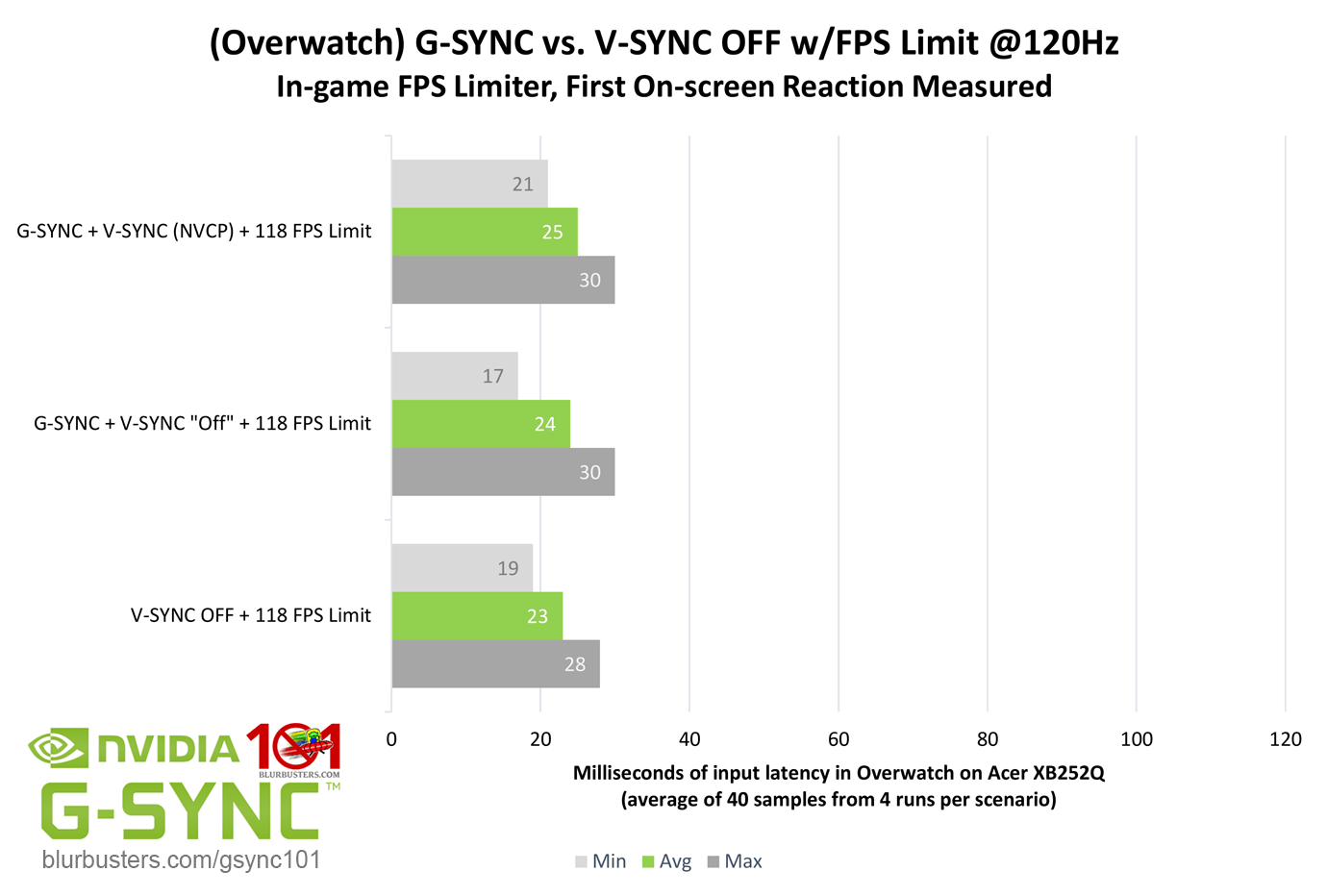

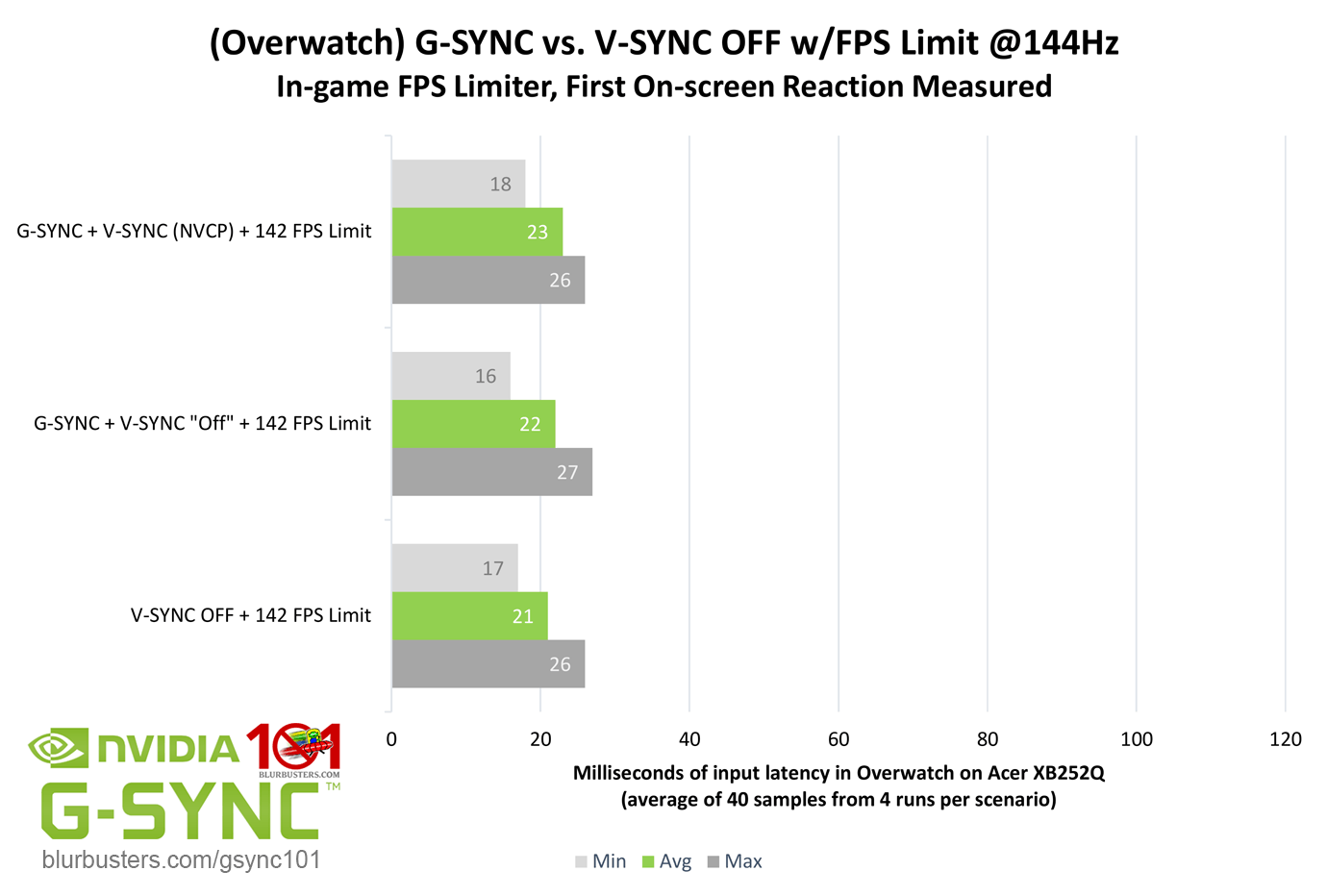

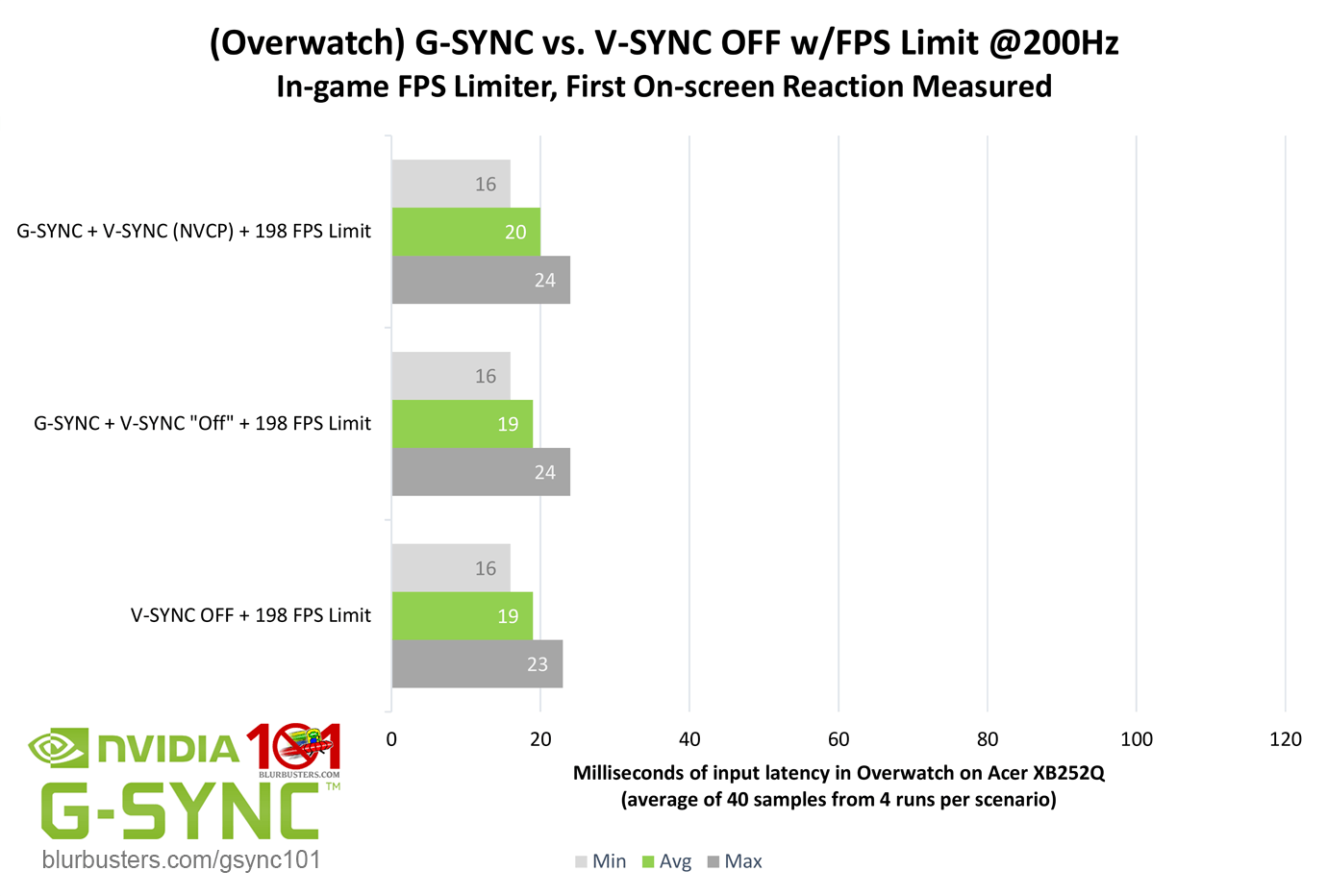

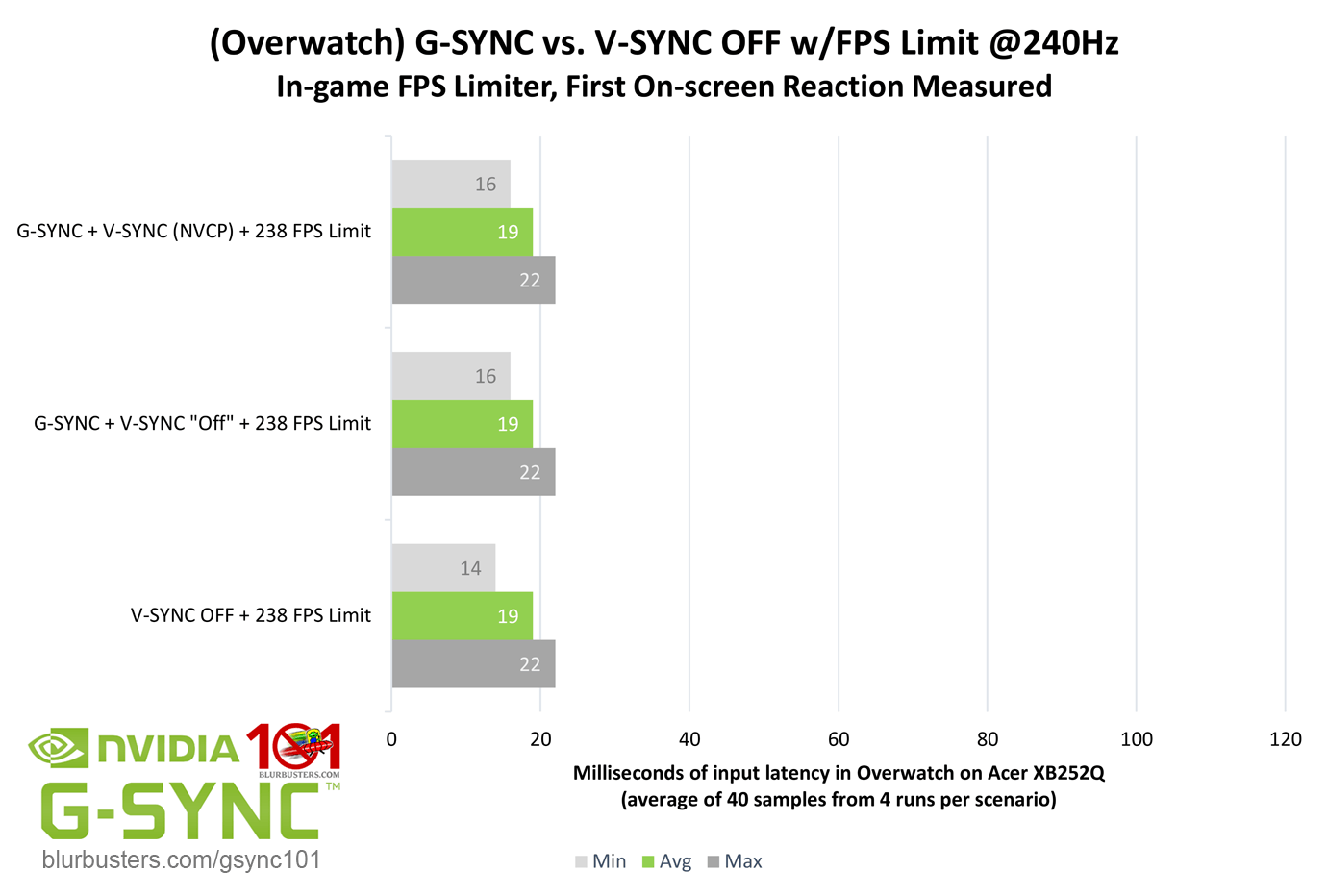

Now that the FPS limit required for G-SYNC to avoid V-SYNC-level input lag has been established, how do G-SYNC + V-SYNC and G-SYNC + V-SYNC “Off” compare to V-SYNC OFF at the same framerate?

The results show a consistent difference between the three methods across most refresh rates (240Hz is nearly equalized in any scenario), with V-SYNC OFF (and, to a lesser degree, G-SYNC + V-SYNC “Off”) appearing to have a slight edge over G-SYNC + V-SYNC. Why? The answer is tearing…

With any vertical synchronization method, the delivery speed of a single, tear-free frame (barring unrelated frame delay caused by many other factors) is ultimately limited by the scanout. As mentioned in G-SYNC 101: Range, the “scanout” is the total time it takes a single frame to be physically drawn on-screen, pixel by pixel, left to right, top to bottom.

With a fixed refresh rate display, both the refresh rate and scanout remain fixed at their maximum, regardless of framerate. With G-SYNC, the refresh rate is matched to the framerate, and while the scanout speed remains fixed, the refresh rate controls how many times the scanout is repeated per second (60 times at 60 FPS/60Hz, 45 times at 45 FPS/45Hz, etc.). It also controls the duration of the vertical blanking interval (the span between the previous and next frame scan), where G-SYNC calculates and performs all overdrive and synchronization adjustments from frame to frame.

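The scanout speed itself, both on a fixed refresh rate and variable refresh rate display, is dictated by the current maximum refresh rate of the display. At 144Hz, for example, a full scanout takes roughly 6.9ms (1000 / 144), whether the framerate is 144 FPS or 45 FPS.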
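As a rough illustration of the relationship described above, the sketch below estimates the scanout time from the maximum refresh rate, and the idle span left between scans when G-SYNC matches the refresh rate to a lower framerate. It is a simplified model, not a measurement: the function names are hypothetical, and it assumes the entire refresh interval at the maximum refresh rate is spent scanning (the small fixed blanking period present in real display timings is ignored).

```python
def scanout_ms(max_refresh_hz: float) -> float:
    """Approximate time (ms) to draw one frame top to bottom.

    Assumption: the whole refresh interval at the maximum refresh
    rate is active scanout (fixed blanking time is ignored).
    """
    if max_refresh_hz <= 0:
        raise ValueError("max_refresh_hz must be positive")
    return 1000.0 / max_refresh_hz


def vrr_idle_span_ms(max_refresh_hz: float, framerate_fps: float) -> float:
    """Approximate span (ms) between the end of one frame scan and the
    start of the next when the refresh rate tracks the framerate."""
    if not 0 < framerate_fps <= max_refresh_hz:
        raise ValueError("framerate must be within the refresh range")
    frame_interval_ms = 1000.0 / framerate_fps
    return frame_interval_ms - scanout_ms(max_refresh_hz)


if __name__ == "__main__":
    for fps in (144, 100, 60, 45):
        print(
            f"{fps:>3} FPS on 144Hz: "
            f"scanout ~{scanout_ms(144):.1f}ms, "
            f"idle span ~{vrr_idle_span_ms(144, fps):.1f}ms"
        )
```

Under these assumptions the scanout time stays constant across framerates, while the span between scans grows as the framerate drops, which is the behavior the paragraphs above describe.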

V-SYNC OFF can defeat the scanout by starting the scan of the next frame(s) within the previous frame’s scanout, anywhere on screen and at any given time.

This results in simultaneous delivery of more than one frame scan in a single scanout (tearing), but also a reduction in input lag, the amount of which is dictated by the position and number of tearlines, which are in turn dictated by the ratio of refresh rate to sustained framerate (more on this later).
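To make the tearline relationship more concrete, here is a minimal sketch of where a tearline would land if a new frame arrives partway through a scanout, and how many frame scans share a single scanout on average. The function names and the linear top-to-bottom model are assumptions for illustration only; it again treats the full refresh interval as active scanout and ignores blanking.

```python
def tearline_row(arrival_ms: float, max_refresh_hz: float, rows: int) -> int:
    """Estimate the screen row where a tearline appears.

    arrival_ms: time since the current scanout began when the new
    frame becomes available (must fall within the scanout).
    Assumption: rows are drawn at a constant rate, top to bottom.
    """
    scanout = 1000.0 / max_refresh_hz
    if not 0 <= arrival_ms < scanout:
        raise ValueError("arrival_ms must fall within one scanout")
    return int(arrival_ms / scanout * rows)


def frames_per_scanout(framerate_fps: float, max_refresh_hz: float) -> float:
    """Average number of frame scans delivered within one scanout
    with V-SYNC OFF (the framerate/refresh rate ratio)."""
    return framerate_fps / max_refresh_hz


if __name__ == "__main__":
    half_scanout = (1000.0 / 144) / 2
    # A frame arriving halfway through a 144Hz scanout on a
    # 1080-row display tears at the vertical midpoint.
    print(tearline_row(half_scanout, 144, 1080))
    # At 300 FPS on 144Hz, each scanout carries about two frame scans.
    print(round(frames_per_scanout(300, 144), 2))
```

In this model, everything below the tearline shows the newer frame without waiting for the next scanout, which is where the input lag reduction comes from.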

As noted in G-SYNC 101: Range, G-SYNC + V-SYNC “Off” (a.k.a. Adaptive G-SYNC) can have a slight input lag reduction over G-SYNC + V-SYNC as well, since it will opt for tearing instead of aligning the next frame scan to the next scanout when sudden frametime variances occur.

To eliminate tearing, G-SYNC + V-SYNC is limited to completing a single frame scan per scanout, and it must follow the scanout from top to bottom, without exception. On paper, this can give the impression that G-SYNC + V-SYNC has an increase in latency over the other two methods. However, the delivery time of a single, complete frame with G-SYNC + V-SYNC is actually the lowest possible, or neutral, and the advantage seen with V-SYNC OFF is a reduction below that neutral baseline, made possible by its ability to defeat the scanout.

Bottom line: within its range, G-SYNC + V-SYNC delivers single, tear-free frames to the display as fast as the scanout allows; any faster, and tearing would be introduced.