Assembly of multiple parts plays a critical role in nearly every major industry, including manufacturing, automotive, aerospace, electronics, and medical devices. Despite its widespread use, robotic assembly remains a significant challenge. It involves complex interactions in which robots must manipulate objects through continuous physical contact, which demands a high level of precision and accuracy. Robotic assembly has long been constrained by fixed automation: systems built for specific tasks that require heavy human engineering to design and deploy, which limits their adaptability and scalability.

The future of robotic assembly lies in flexible automation. Tomorrow’s robots must rapidly adapt to new parts, poses, and environments. Integrating robotics with simulation and AI can break through these limitations. NVIDIA has spent several years advancing research in this area, and our ongoing partnership with Universal Robots (UR) is driving this transformation from research innovations into real-world industrial applications.

In this post, we showcase the zero-shot sim-to-real transfer of a gear assembly task on the UR10e robot. We designed the task and trained its policies in NVIDIA Isaac Lab, then deployed them using NVIDIA Isaac ROS and the UR10e low-level torque interface. Our goal is to enable anyone to replicate this work and use Isaac Lab and Isaac ROS for their own sim-to-real applications.

Isaac Lab is an open-source, modular training framework for robot learning. Isaac ROS, built on the open-source ROS 2 software framework, is a collection of accelerated computing packages and AI models that brings NVIDIA acceleration to ROS developers everywhere. It offers ready-to-use packages for common tasks like navigation and perception.

Contact-rich simulation in Isaac Lab

Isaac Lab makes contact-rich simulation practical by combining accurate physics with large-scale reinforcement learning (RL), or learning through trial and error, across thousands of parallel environments. Simulating complex contact interactions at this scale was once computationally intractable; it is now possible.

Isaac Lab includes challenging industrial tasks from Factory, such as peg insertion, gear meshing, and nut-bolt fastening. Isaac Lab supports both imitation learning (mimicking demonstration data) and RL, providing flexibility in training approaches for any robot embodiment.

RL is a powerful and effective technique for assembly problems, since it does not require human demonstrations and can be very robust to errors in perception, control, and fixturing. However, the reality gap—differences between simulation and the real world—remains a key challenge. The workflow featured in this post bridges this gap using concepts from IndustReal, a set of algorithms and tools that enables robust RL-trained skills that transfer from simulation to real-world robots for complex assembly tasks.

Training the gear assembly task in Isaac Lab

The gear assembly task involves perceiving, grasping, transporting, and inserting all the gears onto their corresponding shafts. Figure 2 shows different states of the task, from the initial state to the goal state for one gear. This requires three core skills: grasp generation, free-space motion generation, and insertion.

Grasp generation uses an off-the-shelf grasp planner to obtain feasible grasp poses for the parts. Motion generation and insertion use RL to learn policies. Motion generation for robot arms is well established and can be achieved with classical trajectory planners, but training an RL-based motion generation policy provides a useful calibration and debugging step for the policy learning framework before tackling the more challenging insertion task.

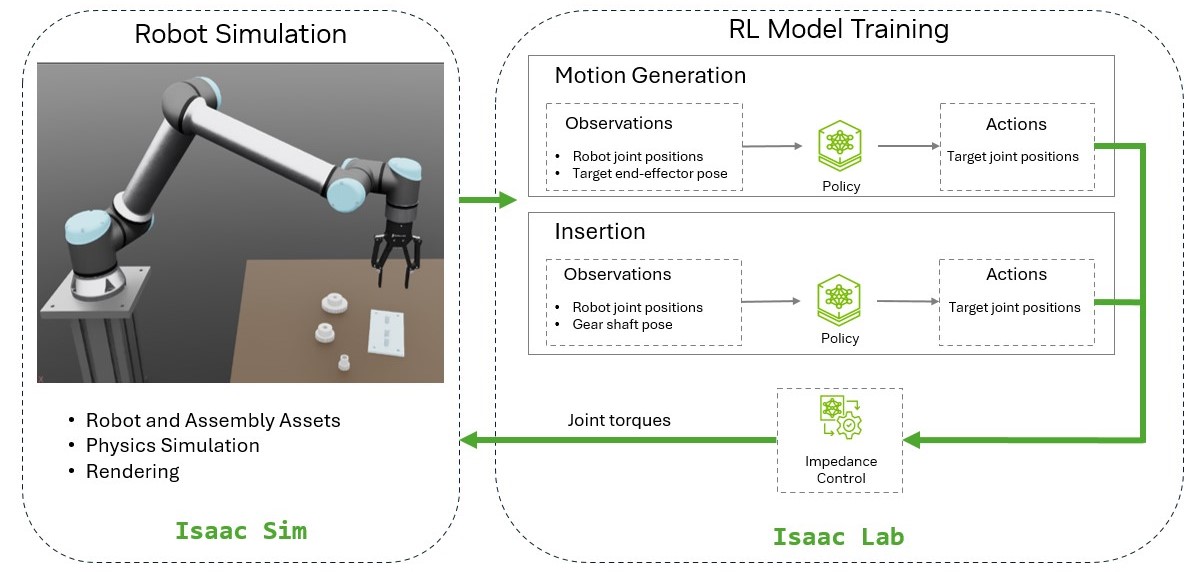

Figure 3 shows the policy learning pipeline for motion generation and insertion tasks using Isaac Sim and Isaac Lab. For each task, assets and scene specifications define the simulation environment in Isaac Sim, while Isaac Lab provides the training environment. The policies for both tasks rely on a low-level impedance controller implemented in Isaac Lab.

The motion generation and insertion skills are each formulated as a separate reinforcement learning problem and learned independently, as illustrated in Figure 3.

- Motion generation: The goal is for the robot, starting at random initial joint angles within the specified robot workspace, to move its end-effector to a specified target pose (a grasp pose, for example). Observations include the robot’s joint positions and the target end-effector pose, while the action space consists of the joint position targets. The reward function minimizes the distance between the end-effector and the target with penalties for abrupt or aggressive robot motions.

- Insertion: The gear is initialized in the robot’s gripper, positioned at a randomly sampled pose near the target shaft. The goal is to move the gear to the base of the shaft. Observations include the robot’s joint positions and the target shaft pose, while the action space consists of the joint position targets. The reward function minimizes the distance between the gear and the goal, with penalties again for abrupt or aggressive robot motions.
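The reward described in both bullets can be sketched as a weighted sum of a tracking error and a smoothness penalty. This is a minimal sketch, not the reward used in this work: the function names, the (w, x, y, z) quaternion convention, modeling "abrupt or aggressive motion" as an action-rate penalty, and all weights are assumptions for illustration.

```python
import math
from typing import Sequence


def quat_angle(q1: Sequence[float], q2: Sequence[float]) -> float:
    """Rotation angle (rad) between two unit quaternions in (w, x, y, z) order."""
    dot = abs(sum(a * b for a, b in zip(q1, q2)))
    return 2.0 * math.acos(min(1.0, dot))  # clamp guards against rounding above 1


def pose_tracking_reward(
    pos: Sequence[float],
    quat: Sequence[float],
    target_pos: Sequence[float],
    target_quat: Sequence[float],
    action: Sequence[float],
    prev_action: Sequence[float],
    w_pos: float = 1.0,    # hypothetical weights
    w_rot: float = 0.1,
    w_rate: float = 0.01,
) -> float:
    """Higher is better: penalizes distance to the target and jerky action changes."""
    pos_err = math.dist(pos, target_pos)
    rot_err = quat_angle(quat, target_quat)
    # Assumed smoothness term: squared change between consecutive actions.
    action_rate = sum((a - b) ** 2 for a, b in zip(action, prev_action))
    return -(w_pos * pos_err + w_rot * rot_err + w_rate * action_rate)


r = pose_tracking_reward(
    pos=(0.0, 0.0, 0.0), quat=(1.0, 0.0, 0.0, 0.0),
    target_pos=(0.3, 0.4, 0.0), target_quat=(1.0, 0.0, 0.0, 0.0),
    action=[0.1, 0.0], prev_action=[0.0, 0.0],
)
print(round(r, 6))  # -0.5001
```

For the insertion skill, the same shape applies with the gear position and the shaft-base goal in place of the end-effector and target pose; setting `w_rot=0.0` reduces it to the distance-only term the insertion bullet describes.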

For both skills, the policy outputs joint position targets at 60 Hz, and a lower-level impedance controller executes them.

The agent was trained on a diverse set of randomized configurations, including initial robot arm poses, poses of the gear in the gripper, gear sizes, and stages of the overall task (for example, no gears inserted yet or some gears already inserted). To facilitate sim-to-real transfer, domain randomization was applied to the robot dynamics (joint friction and damping) and the controller gains, and noise was added to the policy observations. Training runs across many parallel environments so that the agent gathers the diverse experience it needs to learn the task effectively.
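Per-environment randomization can be pictured as drawing a fresh set of parameters for each parallel environment. The sketch below uses only Python's standard library rather than Isaac Lab's own randomization utilities; the parameter names, the ranges, and the choice to scale controller gains multiplicatively are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class EpisodeParams:
    joint_friction: List[float]
    joint_damping: List[float]
    gain_scale: float          # multiplies the nominal controller gains
    obs_noise_std: float
    gears_already_inserted: int


def sample_episode_params(rng: random.Random, num_joints: int = 6,
                          num_gears: int = 3) -> EpisodeParams:
    """Draw one environment's randomized parameters (all ranges are hypothetical)."""
    return EpisodeParams(
        joint_friction=[rng.uniform(0.0, 0.05) for _ in range(num_joints)],
        joint_damping=[rng.uniform(0.0, 0.1) for _ in range(num_joints)],
        gain_scale=rng.uniform(0.8, 1.2),
        obs_noise_std=rng.uniform(0.0, 0.005),
        # Task stage: 0 means no gears inserted yet; at least one gear is always left.
        gears_already_inserted=rng.randint(0, num_gears - 1),
    )


def add_observation_noise(obs: List[float], std: float,
                          rng: random.Random) -> List[float]:
    """Add zero-mean Gaussian noise to every observation entry."""
    return [x + rng.gauss(0.0, std) for x in obs]


rng = random.Random(0)
envs = [sample_episode_params(rng) for _ in range(4096)]  # one draw per parallel env
```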

Network architecture and RL algorithm details

Each policy is structured as a Long Short-Term Memory (LSTM) network with 256 units, followed by a multilayer perceptron (MLP) with three layers containing 256, 128, and 64 neurons, respectively. The policies were trained using the Proximal Policy Optimization (PPO) algorithm from the rl-games library. The training was done in Isaac Sim 4.5 and Isaac Lab 2.1 on an NVIDIA RTX 4090 GPU.
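To get a feel for the size of this network, the sketch below counts the parameters of an LSTM with 256 units followed by an MLP of 256, 128, and 64 units, using PyTorch's parameter layout for `nn.LSTM` (two bias vectors per layer) and `nn.Linear`. The observation size of 13 (six joint positions plus a 3D target position and a 4D quaternion) and the 6-dimensional action head are assumptions, and the count ignores the critic, the learned action standard deviation, and any extra layers the rl-games network builder may add.

```python
from typing import Sequence


def lstm_params(input_size: int, hidden_size: int) -> int:
    """Single-layer LSTM: weight_ih (4H x I), weight_hh (4H x H), bias_ih and bias_hh (4H each)."""
    return 4 * hidden_size * (input_size + hidden_size + 2)


def linear_params(in_features: int, out_features: int) -> int:
    """Fully connected layer: weight matrix plus bias vector."""
    return in_features * out_features + out_features


def policy_params(obs_dim: int, action_dim: int, lstm_units: int = 256,
                  mlp_units: Sequence[int] = (256, 128, 64)) -> int:
    """LSTM first, then the MLP, then a linear head producing the action mean."""
    total = lstm_params(obs_dim, lstm_units)
    width = lstm_units
    for units in mlp_units:
        total += linear_params(width, units)
        width = units
    total += linear_params(width, action_dim)
    return total


print(policy_params(obs_dim=13, action_dim=6))  # 384838
```

Most of the parameters sit in the LSTM's recurrent weights, which is typical when the hidden size is much larger than the observation size.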

Results

Figure 4 shows the trained policies in action on a simulated UR10e robot in Isaac Lab, with motion generation on the left and insertion on the right.

Figure 4. Trained policies tested on a UR10e robot in Isaac Lab simulation: motion generation (left) and insertion (right)

Figure 5 demonstrates how the learned skills can be combined. By repeatedly calling the grasp planner and both learned skills, the robot assembles three gears placed at random positions. The policies are robust to both the order in which the gears are assembled and the initial poses of the gears.

Figure 5. The trained RL policies are sequenced to perform multiple robotic assembly steps in a loop: move to gear 1 → grasp → insert → move to gear 2 → grasp → insert, and so on
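The loop in Figure 5 amounts to a simple orchestration routine. In this sketch, `plan_grasp`, `move_to`, `grasp`, and `insert` are hypothetical callables standing in for the grasp planner, the motion generation policy, the gripper command, and the insertion policy; they are not Isaac Lab or Isaac ROS APIs.

```python
from typing import Callable, Iterable, List


def assemble_gears(
    gear_ids: Iterable[str],
    plan_grasp: Callable[[str], str],
    move_to: Callable[[str], None],
    grasp: Callable[[], None],
    insert: Callable[[str], None],
) -> List[str]:
    """Run move -> grasp -> insert for each gear; returns the gears completed, in order."""
    completed: List[str] = []
    for gear in gear_ids:
        grasp_pose = plan_grasp(gear)  # off-the-shelf grasp planner
        move_to(grasp_pose)            # learned motion generation policy
        grasp()                        # close the gripper on the gear
        insert(gear)                   # learned insertion policy
        completed.append(gear)
    return completed


# Usage with stub callables that just record what was called.
trace: List[str] = []
done = assemble_gears(
    ["gear_1", "gear_2"],
    plan_grasp=lambda g: f"grasp_pose({g})",
    move_to=lambda pose: trace.append(f"move:{pose}"),
    grasp=lambda: trace.append("grasp"),
    insert=lambda g: trace.append(f"insert:{g}"),
)
print(trace)
```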

UR torque control interface enabling sim-to-real transfer

This work employs impedance control, a technique that allows robots to interact with objects safely and compliantly (softly). Position controllers offer high accuracy, but their rigidity means that small errors from perception or real-world misalignment can produce large unintended contact forces. Impedance control offers a more flexible alternative.

Impedance control requires sending torque commands directly to the joints, an interface that most industrial robots, which ship with stiff position controllers, do not expose. UR is now providing early access to its direct torque control interface to enable this type of control.
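A common form of joint-space impedance control treats each joint as a virtual spring and damper around its target, `tau = kp * (q_target - q) - kd * qd`, optionally plus gravity compensation. The sketch below assumes that form and adds a per-joint torque clamp; it is not the URScript controller used in this work, and the gains and limits in the example are made up.

```python
from typing import List, Optional, Sequence


def joint_impedance_torques(
    q: Sequence[float],          # measured joint positions (rad)
    qd: Sequence[float],         # measured joint velocities (rad/s)
    q_target: Sequence[float],   # target joint positions from the policy (rad)
    kp: Sequence[float],         # stiffness per joint (N*m/rad)
    kd: Sequence[float],         # damping per joint (N*m*s/rad)
    tau_limit: Sequence[float],  # symmetric torque limit per joint (N*m)
    gravity: Optional[Sequence[float]] = None,  # optional gravity-compensation torques
) -> List[float]:
    """Spring-damper torque toward the target, clamped to +/- tau_limit."""
    tau: List[float] = []
    for i in range(len(q)):
        t = kp[i] * (q_target[i] - q[i]) - kd[i] * qd[i]
        if gravity is not None:
            t += gravity[i]
        tau.append(max(-tau_limit[i], min(tau_limit[i], t)))
    return tau


print(joint_impedance_torques([0.0], [0.1], [0.2], [100.0], [10.0], [50.0]))
```

Lowering `kp` makes a joint softer: the same position error produces a smaller torque, which keeps contact forces bounded when perception or alignment is slightly off.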

In collaboration with UR, the team at NVIDIA trained policies using Isaac Lab and methods from the paper IndustReal: Transferring Contact-Rich Assembly Tasks from Simulation to Reality, then deployed them on a UR10e robot using Isaac ROS and the UR torque interface. The deployment uses both the Segment Anything and FoundationPose packages from Isaac ROS.

Sim-to-real transfer on UR10e using Isaac ROS and UR torque interface

Figure 6 shows the sim-to-real transfer framework used to deploy the trained policy from Isaac Lab. The workflow starts with a perception pipeline: an RGB image is fed into Segment Anything, which outputs a segmentation mask. This mask, combined with the depth image, is then passed to FoundationPose to estimate the 6D poses of the gear plugs.
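One way to see what the mask and the depth image contribute together: the mask picks out which pixels belong to the gear, and depth gives each of those pixels a 3D location. The sketch below illustrates that idea with a pinhole back-projection; it is not how FoundationPose processes its inputs internally, and the camera intrinsics in the example are toy values.

```python
from typing import List, Sequence, Tuple


def masked_points(
    depth: Sequence[Sequence[float]],  # depth image in meters, indexed [row v][column u]
    mask: Sequence[Sequence[bool]],    # segmentation mask, same shape as depth
    fx: float, fy: float, cx: float, cy: float,  # pinhole intrinsics in pixels
) -> List[Tuple[float, float, float]]:
    """Back-project masked pixels with valid depth into camera-frame 3D points."""
    points: List[Tuple[float, float, float]] = []
    for v, (depth_row, mask_row) in enumerate(zip(depth, mask)):
        for u, (z, m) in enumerate(zip(depth_row, mask_row)):
            if m and z > 0.0:  # skip background and missing depth readings
                x = (u - cx) * z / fx
                y = (v - cy) * z / fy
                points.append((x, y, z))
    return points


depth = [[0.5, 0.5], [0.5, 0.6]]
mask = [[False, False], [False, True]]
print(masked_points(depth, mask, fx=1.0, fy=1.0, cx=0.0, cy=0.0))  # [(0.6, 0.6, 0.6)]
```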

The gear poses and the joint positions from the UR joint encoders form the observations passed to the policy, which predicts delta joint positions. These deltas are converted into absolute target joint positions that serve as input to the impedance controller. This custom lower-level impedance controller, written in URScript, runs at 500 Hz and computes the joint torques the UR robot needs to perform the task. Results are shown in Video 1.
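The hand-off between the 60 Hz policy and the 500 Hz controller can be sketched as a zero-order hold: each policy step produces a new absolute target, and every controller tick in between tracks the most recent one. This single loop simulates the timing only; on the real system the controller runs in URScript on the robot. The sketch assumes the delta is added to the current measured joint positions, and the `policy`, `read_joints`, and `send_target` callables, the action scale, and the per-step clamp are all hypothetical.

```python
from typing import Callable, List, Sequence

POLICY_HZ = 60           # policy rate stated in the post
CONTROL_HZ = 500         # impedance-controller rate stated in the post
TICKS_PER_SECOND = 3000  # least common multiple of 60 and 500, so timing stays exact


def delta_to_target(q: Sequence[float], delta: Sequence[float],
                    scale: float = 1.0, max_step: float = 0.05) -> List[float]:
    """Absolute target = measured joints + scaled delta, clamped per step (hypothetical)."""
    return [qi + max(-max_step, min(max_step, scale * di)) for qi, di in zip(q, delta)]


def run_one_second(policy: Callable[[List[float]], List[float]],
                   read_joints: Callable[[], List[float]],
                   send_target: Callable[[List[float]], None]) -> int:
    """Simulate one second of timing; returns how many times the policy ran."""
    control_period = TICKS_PER_SECOND // CONTROL_HZ  # 6 ticks
    policy_period = TICKS_PER_SECOND // POLICY_HZ    # 50 ticks
    next_policy_tick = 0
    policy_calls = 0
    target: List[float] = []
    for k in range(CONTROL_HZ):
        now = k * control_period
        if now >= next_policy_tick:  # a new 60 Hz policy step is due
            q = read_joints()
            target = delta_to_target(q, policy(q))
            next_policy_tick += policy_period
            policy_calls += 1
        send_target(target)  # controller holds the latest target between policy steps
    return policy_calls


sent: List[List[float]] = []
calls = run_one_second(lambda q: [0.01] * len(q), lambda: [0.0] * 6, sent.append)
print(calls, len(sent))  # 60 500
```

Because 500 is not a multiple of 60, the controller runs either eight or nine ticks per policy step; counting time in integer ticks avoids the drift that accumulating floating-point periods would introduce.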

Get started

This post showcased the zero-shot sim-to-real transfer of a gear assembly task on the UR10e robot. We designed the task and trained its policies in NVIDIA Isaac Lab, then deployed them using NVIDIA Isaac ROS and the UR10e low-level torque interface.

Ready to get started developing your own contact-rich manipulation policies for robotic assembly tasks? Check out these resources:

- Download Isaac Sim and Isaac Lab to explore training contact-rich environments.

- To learn more about the algorithms featured in this post, see IndustReal: Transferring Contact-Rich Assembly Tasks from Simulation to Reality and Transferring Industrial Robot Assembly Tasks from Simulation to Reality.

- Reference the Isaac ROS documentation.

- Download the early-access robot software from UR that includes the Direct Torque Command.

- Read the NVIDIA Robotics Research and Development Digest (R²D²) for more about the breakthroughs in contact-rich manipulation for robotic assembly.

Stay tuned for the release of Isaac Lab environments and training code, which will enable anyone to test these policies and train their own, as well as a reference workflow for deploying these policies on your own UR robots.

Stay up to date by subscribing to our newsletter and following NVIDIA Robotics on LinkedIn, Instagram, X, and Facebook. Explore NVIDIA documentation and YouTube channels, and join the NVIDIA Developer Robotics forum. To start your robotics journey, enroll in our free NVIDIA Robotics Fundamentals courses today.

Get started with NVIDIA Isaac libraries and AI models for developing physical AI systems.

Acknowledgments

We would like to acknowledge Yashraj Narang and Buck Babich for advising the development of the UR sim-to-real demonstration. We thank Rune Søe-Knudsen at Universal Robots for providing technical support with the newly developed direct torque control interface. We also thank Bingjie Tang, Michael A. Lin, Iretiayo Akinola, Ankur Handa, Gaurav S. Sukhatme, Fabio Ramos, Dieter Fox, and Yashraj Narang for leading the IndustReal paper upon which the demonstration is based.