Until the 2000s vacuum tubes practically ruled the roost. Even if they had surrendered practically fully to semiconductor technology like integrated circuits, there was no escaping them in everything from displays to video cameras. Until CMOS sensor technology became practical, proper video cameras used video camera tubes and well into the 2000s you’d generally scoff at those newfangled LC displays as they couldn’t capture the image quality of a decent CRT TV or monitor.

For a while it seemed that LCDs might indeed be just a flash in the pan, as they found themselves competing not just with old-school CRTs, but also with their purported successors in the form of SED and FED in particular, while plasma TVs made home cinema go nuts for a long while with sizes, fast response times and black levels worth their high sale prices.

We all know now that LCDs survived, along with the newcomer in OLED displays, but despite this CRTs do not feel like something we truly left behind. Along with a retro computing revival, there’s an increasing level of interest in old-school CRTs to the point where people are actively prowling for used CRTs and the discontent with LCDs and OLED is clear with people longing for futuristic technologies like MicroLED and QD displays to fix all that’s wrong with today’s displays.

Could the return of CRTs be nigh in some kind of format?

What We Have Lost

As anyone who was around during the change from CRT TVs to ‘flat screen’ LCD TVs can attest to, this newfangled display technology came with a lot of negatives. Sure, that 21″ LCD TV or monitor no longer required a small galaxy of space behind the display on the desk or stand, nor did it require at least two people to transport it safely, nor was the monitor on your desk the favorite crispy warm napping spot of your cat.

The negatives mostly came in the form of the terrible image quality. Although active matrix technology fixed the smearing and extreme ghosting of early LC displays at higher refresh rates, you still had multi-millisecond response times compared to the sub-millisecond response time of CRTs, absolutely no concept of blacks and often horrendous backlight bleeding and off-angle visual quality including image inverting with TN-based LCD panels. This is due to how the stack of filters that makes up an LC display manipulates the light, with off-angle viewing disrupting the effect.

Meanwhile, CRTs are capable of OLED-like perfect blacks due to phosphor being self-luminous and thus requiring no backlight. This is a feat that OLED tries to replicate, but with its own range of issues and workarounds, not to mention the limited lifespan of the organic light-emitting diodes that make up its pixels, and their relatively low brightness that e.g. LG tries to compensate for with a bright white sub-pixel in their WOLED technology.

Even so, OLED displays will get dimmer much faster than the phosphor layer of CRTs, making OLED displays relatively fragile. The ongoing RTINGS longevity test is a good study case of a wide range of LCD and OLED TVs here, with the pixel and panel refresh features on OLEDs turning out to be extremely important to even out the wear.

CRTs are also capable of syncing to a range of resolutions without scaling, as CRTs do not have a native resolution, merely a maximum dot pitch for their phosphor layer beyond which details cannot be resolved any more. The change to a fixed native resolution with LCDs meant that subpixel rendering technologies like Microsoft’s ClearType became crucial.

To this day LCDs are still pretty bad at off-angle performance, meaning that you have to look at a larger LCD from pretty close to forty-five degrees from the center line to not notice color saturation and brightness shifts. While per-pixel response times have come down to more reasonable levels, much of this is due to LCD overdriving, which tries to compensate for ghosting by using higher voltages for the pixel transitions, but can lead to overshoot and a nasty corona effect, as well as reduce the panel’s lifespan.

Both OLEDs and LCDs suffer from persistence blurring even when their pixel-response times should be fast enough to keep up with a CRT’s phosphors. One current workaround is black frame insertion (BFI), which can be done in a variety of ways, including strobing the backlight on LCDs, but this is just one of many motion blur reduction workarounds.

As noted by the Blur Busters article, some of these blur reduction approaches work better than others, with issues like strobe crosstalk generally still being present, yet hopefully not too noticeably.
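To put rough numbers on persistence blur, here is a minimal Python sketch. It assumes the common rule of thumb that the smear seen by a tracking eye is about the on-screen speed multiplied by the time each frame stays lit. The function names, the 960 px/s panning speed and the 25% duty cycle are illustrative assumptions, not figures from the article.

```python
def persistence_ms(refresh_hz: float, duty_cycle: float = 1.0) -> float:
    """How long each frame stays lit, in milliseconds.

    duty_cycle=1.0 is plain sample-and-hold; smaller values model black
    frame insertion or a strobed backlight (an assumed simplification).
    """
    return 1000.0 / refresh_hz * duty_cycle


def blur_px(speed_px_per_s: float, lit_ms: float) -> float:
    """Approximate smear width for an eye tracking a moving object."""
    return speed_px_per_s * lit_ms / 1000.0


if __name__ == "__main__":
    speed = 960.0  # assumed panning speed in pixels per second
    cases = [
        ("60 Hz sample-and-hold", 60.0, 1.0),
        ("60 Hz with 25% duty BFI", 60.0, 0.25),
        ("240 Hz sample-and-hold", 240.0, 1.0),
    ]
    for label, hz, duty in cases:
        lit = persistence_ms(hz, duty)
        print(f"{label}: {lit:.2f} ms lit, ~{blur_px(speed, lit):.1f} px of blur")
```

Under this simplified model, shortening the lit time matters regardless of how fast the pixels themselves switch, which is why strobing can help even on panels with quick pixel response.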

In short, modern LCDs and OLED displays are still really quite bad by a number of objective metrics compared to CRTs, making it little wonder that there’s a strong hankering for something new, along with blatant nostalgia for plasma and CRT technology, flawed as they are. That said, we live in 2025 and thus do not have to be constrained by the technological limitations of 1950s pre-semiconductor vacuum tube technology.

The SED Future

One major issue with CRTs is hard to ignore, no matter how rose-tinted your nostalgia glasses are. Walking into an electronics store back in the olden days with a wall of CRT TVs on display you’re hit by both the high-pitched squeal from the high-voltage flyback converters and the depth of these absolute units. While these days you’ve got flat panel TVs expanding into ever larger display sizes, CRT TVs were always held back by the triple electron gun setup. These guns generate the electrons which are subsequently magnetically guided to the bit of phosphor that they’re supposed to accelerate into.

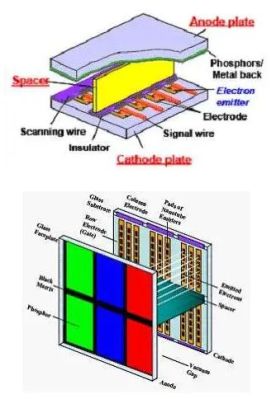

Making such CRTs flat can be done to some extent by getting creative with said guidance, but with major compromises like divergence and you’ll never get a real flat panel. This dilemma led to the concept of replacing the glass tube and small number of electron guns with semiconductor or carbon-nanotube electron emitters. Placed practically right on top of the phosphor layer, each sub-pixel could have its own minuscule electron gun this way, with the whole setup being reminiscent of plasma displays in many ways, just thinner, less power-hungry and presumably cheaper.

Canon began research on Surface-conduction Electron-Emitter Display (SED) technology in 1986 as a potential successor to CRT technology. This was joined in 1991 by a similar ‘ThinCRT’ effort that used field emission, which evolved into Sony’s FED take on the very similar SED technology. Although both display technologies are rather similar, they have a very different emitter structure, which affects the way they are integrated and operated.

Both of them have in common that they can be very thin, with the thickness determined by the thickness of the cathode plate – featuring the emitters – combined with that of the anode and the vacuum space in between. As mentioned in the review article by Fink et al. from 2007, the vacuum gap at the time was 1.7 mm for a 36″ SED-type display, with spacers inside this vacuum providing the structural support against the external atmosphere not wanting said vacuum to exist there any more.

This aspect is similar to CRTs and vacuum fluorescent displays (VFDs), though one requirement with both SED and FED is to have a much better vacuum than in CRTs due to the far smaller tolerances. While in CRTs it was accepted that the imperfect vacuum would create ions in addition to electrons, this molecule-sized issue did necessitate the integration of so-called ion traps in CRTs prior to aluminized CRT faces, but this is not an option with these new display types.

For SEDs and FEDs there is fortunately a solution to maintain a pure vacuum through the use of so-called getters, reactive materials that bind gas molecules to remove them from the vacuum gap. With all of this in place and the unit sealed, the required driving voltage for SED at the time was about 20V compared to 50-100V for FED, which is still far below the kilovolt-level driving voltage for CRTs.

A Tenuous Revival

Both the companies behind SED and Sony decided to spin down their R&D on this new take on the venerable CRT, as LCDs were surging into the market. As consumers discovered that they could now get 32+” TVs without having to check the load-bearing capacity of their floor or resorting to the debauchery of CRT (rear) projectors, the fact that LCD TVs weren’t such visual marvels was a mere trifle compared to the fact that TVs were now wall-mountable.

Even as image quality connoisseurs flocked first to plasma and then OLED displays, the exploding market for LCDs crowded out alternatives. During the 2010s you’d find CRTs discarded alongside once prized plasma TVs, either given away for practically free or scrapped by the thousands. Then came the retro gaming revival, which is currently sending the used CRT market skyrocketing, and which is leading us to ask major questions about where the display market is heading.

Although CRTs never really went away from a manufacturing point of view, these days it’s mostly specialized manufacturers like Thomas Electronics who will satisfy your CRT fix, though on a strict ‘contact us for a quote’ basis. Restarting a mass-manufacturing production line for something like once super-common CRT TVs would require a major investment that so far nobody is willing to front.

Meanwhile LCD and OLED technology have hit some serious technological dead-ends, while potential non-organic LED alternatives such as microLED have trouble scaling down to practical pixel densities and yields.

There’s a chance that Sony and others can open some drawers with old ‘thin CRT’ plans, dust off some prototypes and work through the remaining R&D issues with SED and FED for potentially a pittance of what alternative, brand-new technologies like MicroLED or quantum dot displays would cost.

Will it happen? Maybe not. It’s quite possible that we’ll still be trying to fix OLED and LCDs for the next decade and beyond, while waxing nostalgic about how much more beautiful the past was, and the future could have been, if only we hadn’t bothered with those goshdarn twisting liquid crystals.

Unpopular opinion, pixel art looks better without gaussian blur

Modern pixel art is very different than retro game artwork that was designed for low resolution and CRT. Modern pixel art is designed to be displayed on digital displays (grid).

CRT blur is not Gaussian blur. It’s a horizontal blur. It mixes the colors of adjacent pixels. This allows artists to create the illusion of more colors than were supported by the palette.

CRT blur depends on the type of mask you’re using and the resolution you’re trying to display. It has the characteristics of a gaussian blur by how the electron beam spreads, and the outcome depends on the arrangement of sub-pixels (e.g. delta mask, aperture mask, etc.)

Artists back in the CRT era wished they didn’t have to deal with the inherent blurriness of a CRT, and tried to work around it.

That’s not necessarily the truth. It’s possible they may have been dismayed by the low display resolutions of the screens as well as of the hardware of the computers and consoles of the time. However, they used this blurring and natural looking “anti aliasing” effect to their advantage, creating a more smooth looking image. These games were NOT designed around pixel art looking pixellated. This was especially apparent in the mid 90s just after the NES era, at which point games had just enough on screen pixels that they could just about make them look smoother and detailed with the strengths and limitations of the CRT technology. Play Donkey Kong Country, Sonic 1, Duke Nukem 3D or even just look at screen shots from 90s gaming magazines to get an idea of what I’m talking about. Modern indie games use the pixel art effect to their advantage but they were designed to look pixellated.

– Some dude who usually doesn’t comment

Retro game consoles used composite video output which caused further blur as well as color artifacting.

EULOGY FOR A TECHNOLOGY

Oh brilliant CRT, where are you now?

Rejected by the masses who formerly visually adored your presence, now,

Lowered to an oblivion where even recycle conscious second-hand stores refuse to show you

Television – radio for the eyes. The theater in the home. Amazing.

Hidden in odd basements and garages, you may dream of the

Science and manufacturing and systems developed for your heyday. Tubes and solid state.

Vacuum technology, mass production, high voltage controls, magnetics, degaussing circuits and

Systems of broadcast networks, antennas, transmission standards.

The dust collects on your terminals corroding from disuse. Rusted cans of “tuner renew” long gone.

Mice gnaw on your maze of wiring and logical connections. Old capacitors, slowly bleeding their gasses

Your heart warms with past highlights… public adoration; live broadcasts; 50’s black and white;

Westerns and sitcoms; First color sets; remote control; news and follies; visual moon walks; pong.

I have to see this! Adjust the horizontal hold ’till it stops jumping!

I miss the fading bright spot in the middle as you powered down;

The crackling static as I moved my hand against the glass;

The antenna rotator control which made signals come from different directions and other cities;

The craftsmanship that enveloped you in finished wood cabinets… that now, still can

Occasionally be seen by the streets… on collection day.

I salute this now -homeless- technology, and give thanks to those pioneers that made you happen.

May your funeral pyre burn bright with the spurious flames of all the service photofacts volumes.

I vaguely remember hearing about something similar to SEDs/FEDs called ‘microvalves’, but I think these used tiny discrete chambers with a blob of phosphor at one end, an electron emitter at the other and a grid on the side; it was strictly one per pixel. This is clearly different to what’s being described here. Was this ever a real thing or have I hallucinated them?

Mitsubishi had the DiamondVision Jumbotron displays in the ’80s, which were effectively a matrix of single pixel CRTs. There were other similar large display technologies, but for single panel hollow state displays I’ve only heard of plasma, FED, and SED.

It was intended to be a real thing. Modern displays evolved differently due to manufacturing costs and performance improvements in existing technologies.

I think it’s still pretty easy to get a CRT. Just contact your local real estate agents. Often they are presented with a house (usually after someone’s died) that is full of stuff and almost inevitably there’s a big CRT in the garage.

Also I see them on my local Buy Nothing group occasionally.

In places like the US, maybe. In many European countries CRTs have gone nearly extinct, also since there’s little space for hoarding.

Such as classic VGA monitors from the DOS gaming era (14″ or 15″, fully analog).

The only types you’ll still likely find readily are valuable vintage types, like those b/w TV sets built into wooden furniture.

Or Commodore monitors (say 1084), iconic 1970s portable TVs..

The kind that qualifies more as a prop for a film set than an actual device for daily use.

Expect to pay around 120 USD (100€) for a little black/white TV over here. Or more.

i agree, but as a counter-anecdote, i got rid of my CRT a few months ago. and when i posted it to facebook marketplace, i had multiple people asking for it within a couple hours. i’m not sure anyone that wants one is going without a CRT, but there’s definitely a lot more demand than i was expecting. to some extent, it seems that the still-working units are already spoken for

I have a CRT in my garage and the last time I checked it worked. I think it is a Samsung, but would have to look to be sure. I will do that tomorrow if I remember.

i got offered several recently, i turned them down. problem with crt monitors is they take up so much desk space. if you are using it in your workshop it eats space for tools. i require significant magnification in my old age to solder small. a usb scope does the trick just fine. if my last lcd screen didnt burn its cc tubes. il just get me a small portable display or some such that is easy to move around.

i used to have some nice crt monitors, they were all dark and huge, for a mediocre screen size. i dont see much point, as the only people who really care are 8-bit gamers. let those people collect the free screens. more screen in less space is a lot more valuable to me.

I don’t think LCDs could have driven CRTs from the TV market without the late, unlamented Plasma displays. In some ways, they were the worst of both worlds: you had a fixed pixel pitch, and you had phosphors that burnt in worse than any CRT, and they doubled as heaters. On the other hand, the contrast was great, the colours were vibrant, and you could hang it on the wall.

I still have an old plasma display that somehow avoided burn in, and after 10 years of cheap LCD monitors and laptops, the colours look amazing at 720p.

Plasma TVs also caused a lot of TVI/RFI, which annoyed radio amateurs.

Plasma TVs were also considered unsuitable to video games due to burn in.

That being said, the orange gas plasma screens of 1980s laptops were really cool.

Unlike early LCD screens, they were nicely readable most of the time.

A common “prank” on plasma TV owners was to leave a porn film on pause on the television when they left for holidays. That prank was foiled by later plasma televisions that would automatically switch to a screen saver after so many hours on idle. LCD screens also get burn-in after a while, but it’s reversible.

Which is another thing that we’ve forgotten – CRT screensavers – flying windows and starfields, or random pipes, or a dude on a desert island doing random stuff.

I still have a 50 inch LG Plasma TV from 2007 hanging on my bedroom wall that we use fairly regularly. Can’t believe how much I spent on that TV, but on full brightness (which it has never really spent any real time on in its life) it still looks as crisp as it did the day it was unboxed. This particular model has a very subtle kind of “pixel” shifting it does about every ten seconds to prevent any image retention. Still haven’t found a TV that replicates black nearly as well. Just a shame it weighs 75 pounds!

I’m in the same boat. Bought 2 50-inch plasma tvs. Both are still going strong from daily use. IMO, I like it better than CRT. I also have an OLED & that is holding up well so far, but the refresh rate doesn’t beat any of the plasma TV’s. Either bring back plasma or work on SED or FED. I would try them.

I also have a 50” plasma, I bought it second hand 15 years ago and it is still going strong. No burn in and looks terrific at 720p. Heavy and warm… kinda like me.

About camera modules. For a long time, CCD camera modules were used as a substitute for tube cameras.

Even though the picture quality of tubes was still nicer.

Even the first HD camcorders of the early 2000s didn’t use CMOS yet, but still CCD.

A common resolution was 1440×1080 for CCD cam corders, rather than 1920×1080.

Monochrome b/w camera modules (CCD) were still considered high-tech by the early 90s.

For the first time, affordable little cameras could be installed in toys and models (model trains etc).

They were about the size of a big stamp or a little matchbox.

Color cameras followed soon, but still had a “fuzzy” image.

Camera tubes were susceptible to overdrive with bright scenery, resulting in weird blooming effects and lines. CCD chips were less susceptible to this, partially because they were less sensitive. Television studios had to spray paint or tape over reflective objects to deal with it.

For an example, watch the reflections off of Roger’s guitar in this video:

https://youtu.be/31YZz0BoSuo?list=RD31YZz0BoSuo&t=61

Thanks for the image of Roger making a fish face while whistling… I could have gone my whole life without seeing that.

There’s another video of him teaching a Canadian audience how to “whistle like an African”, where he has them pinching their lower lip and sucking air, and then comments, “I’m glad to see there are so many suckers here tonight.”

Thank you so much for the YouTube clip. Just goes to show that enjoyment of artists is not so much about the quality of the media as it is about the quality of the performer!

With all the retro hype these days, I’m surprised no one has attempted to market an OLED display specifically for mimicking CRT. OLED should have the refresh rate necessary to recreate the raster scan of CRT from an analog video source so you could still play duck hunt or yoshis safari. They could even bond the oled panel to thick curved glass or acrylic for that hazy gaussian goodness people like for some reason.

NES runs at 60 Hz. It had a 256×240 resolution.

You’d need a display with a pixel rate of about 3.7 MHz to simulate a CRT for light guns.
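The figure of about 3.7 MHz follows from multiplying the quoted resolution by the refresh rate. A minimal Python sketch of that arithmetic, assuming visible pixels only (real video timing also includes blanking intervals, which this ignores); the function name is made up for illustration:

```python
def pixel_rate_hz(width: int, height: int, refresh_hz: float) -> float:
    """Visible pixels drawn per second, ignoring blanking intervals."""
    return width * height * refresh_hz


if __name__ == "__main__":
    rate = pixel_rate_hz(256, 240, 60)
    print(f"Pixel rate: {rate / 1e6:.2f} MHz")
    print(f"Time budget per pixel: {1e9 / rate:.0f} ns")
```

The second line of output shows why this matters for light guns: a display mimicking the raster scan would need to light each pixel within a time slot of a few hundred nanoseconds.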

There’s nothing hazy or Gaussian about a good CRT display that’s adjusted properly. If there were, your small text made out of 1-pixel-thick lines wouldn’t be perfectly sharp and clear on this CRT PC monitor I’ve been using daily for the past 19 years. Glass has better optical characteristics than the plastic that digital displays use. Anyone who remembers CRT displays as being “hazy” / “fuzzy” / “blurry” / whatever, either has a bad memory or only ever saw CRTs that were out of adjustment and/or displaying a poor quality video signal.

Also, there is currently no way to mimic a CRT display. OLED may have comparable picture characteristics in terms of contrast / black level / viewing angles, but it’s still a digital display, which means it has a fixed resolution (i.e., a fixed number of pixels) and display lag. The best digital displays in this regard have about 1 ms of display lag, which is literally about a million times more display lag than a CRT has. Display lag has nothing to do with refresh rate. Digital displays have a lot of it because they have to process a digital video signal, whereas a CRT uses an analog video signal which drives the electron gun(s) directly, only limited by the speed of electricity through the chassis, which takes maybe a nanosecond.

Articles like this make me wonder what CRTs people had back then, did they all only have Trinitrons?

Perfect Black levels? Every CRT I ever had would give off a clear glow when sitting on a black screen. Far worse than modern standard LCDs not to even mention displays with dynamic LED backlights.

Didn’t need to worry about subpixel rendering? That’s because they were all blurry. People today all complain about AA with special note towards the ones that make the screen blurry. That was basically all CRTs that I experienced back then. I remember being shocked when I fired up Smash Bros. Melee in an emulator and saw a ton of detail in the start screen background that I had never seen before on my CRTs and console.

At the end of it, yes, trinitrons were pretty much the choice if you could make it. If only for the fact that they were flat instead of fish-bowl shaped.

Not just the glow, the tube itself was more dark gray than black. Perfect black levels is bullshit indeed. But I’ve come to expect this kind of content from certain editors.

I’d have to drag out the CRT TV monitor sitting in the garage to validate this, but my initial thought is that the dynamic range between an (ideally) unlit phosphor and adjacent lit ones is greater than is possible between a completely shuttered LCD pixel and adjacent non-shuttered pixels. Thus, our brain codes the “black” portions of a CRT as darker than the “black” portions of a LCD. This assumes the contrast and brightness of the CRT device is adjusted appropriately. My experience was that you can wash those blacks right out with a twist of a couple of knobs.

LCDs can’t drop the minimum amount of light they emit to zero, so they turn up contrast by passing more light. By adjusting the contrast setting, you’re actually turning the backlight up and both the black level and the white level go up, but because of the eye’s non-linear response to absolute brightness, this looks like the contrast is improving – as long as the ambient light levels match.

CRTs cannot turn the maximum level of light up too much because the phosphors get saturated and the pixels start to bloom, and the whole tube starts to glow by driving it too hard. By adjusting the contrast setting on a CRT you’re changing the signal amplitude that modulates how low the electron beam current can go, which can reach all the way off. If the tube is out of adjustment, the signal may be biased such that the electron beam is never quite off, and you get a persistent glow, or the black or white level gets clipped, etc.

It’s fiddly business to get it right, the right amount of bias and the adjustments themselves keep changing as the tube and the other components age, and all the controls are not accessible to the user – you’d have to go turning pots inside the covers to re-calibrate – so most CRTs are/were more or less slightly out of adjustment all the time.

In absolute terms, modern LCDs reached mid-to-high quality CRT screens at 1000:1 to 1500:1 real contrast ratio about 10 years ago. They are now really as good as most people would get with CRTs back in the day, while top-end CRTs would get up to 2000:1 – but few had the money for those.

The only problem that remains is the backlight leaking through, which makes the LCD appear to have worse contrast in darker ambient environments – and people like to watch movies and play games with the room lights dimmed down, for one thing because it stops you seeing reflections of the room from the panel.

To put things into perspective, black printer ink on white copy paper has a contrast ratio of about 20:1. The best inks on glossy paper can achieve about 100:1 in practical terms. Yet this appears to have a good contrast ratio because the paper does not emit light of itself – it’s actually very dim, so your eye responds to it differently than to a monitor which emits quite a lot of light. The picture on a printed page is operating on a different portion of your retina’s response curve than the monitor.

Coatings like vantablack could achieve 1000:1 but it can’t be applied using regular printing techniques.

The surface of the tube was gray, but that’s beside the point. That only mattered with ambient light reflecting back off the tube, which went away when you turned the lights down. With less ambient light, you could turn down the brightness, which reduced the glow to a minimum.

CRTs improved in contrast with less ambient light, because a pixel turned off did not emit light – unlike LCDs which leak light and appear more washed out with less ambient light. That’s why LCDs look good on the shop floor with bright display lights, and terrible in your living room with the lights turned down for watching a movie.
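The argument about ambient light can be sketched with the usual ambient contrast formula, in which reflected room light is added to both the white and the black level. The Python below is only an illustration: every luminance value is an assumed round number rather than a measurement, and both screens are assumed to reflect the same amount of room light.

```python
def effective_contrast(white_nits: float, black_nits: float,
                       reflected_nits: float) -> float:
    """Contrast ratio once reflected ambient light is added to both levels."""
    return (white_nits + reflected_nits) / (black_nits + reflected_nits)


# Assumed example values, not measurements.
SCREENS = {
    "CRT (beam nearly off for black)": {"white": 100.0, "black": 0.01},
    "LCD (1000:1 native, backlight leak)": {"white": 300.0, "black": 0.30},
}
ROOMS = {"bright room": 5.0, "dim room": 0.05}  # reflected light in nits

if __name__ == "__main__":
    for room, reflected in ROOMS.items():
        for name, s in SCREENS.items():
            ratio = effective_contrast(s["white"], s["black"], reflected)
            print(f"{room:11s} | {name:36s} | {ratio:7.0f}:1")
```

With these assumed numbers the brighter LCD comes out ahead in the bright room, while in the dim room the LCD stays capped near its native ratio and the CRT keeps improving. That matches the direction of the claim, though real tubes with residual glow would do worse than the idealized black level used here.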

Though newer CRTs were programmed to count the hours and gradually increase the electron gun current to compensate for fading phosphors, which made them glow more and more with age.

I tried turning the lights down, but can’t find the Sun’s switch. And sure you can reduce brightness if you think it’ll only affect glow. It also affects, uh, what was it called… oh yeah, BRIGHTNESS. You like watching dark screens I guess. If you’re going that route, what do you think will happen when you reduce brightness on an LCD?

You also contradict yourself. You admit there’s glow, and yet you say “a pixel turned off did not emit light”. So which one is it?

I am in the retrocomputing scene (MSX computers), I use CRT’s daily, and I don’t have a false sense of nostalgia for them. I like using them, just as I like using a data recorder to load games, but I’m not going to pretend a tape is better than an SSD.

Yes. CRTs were rather dim, so people used a thing called curtains to stop sunlight getting into the computer room. If you don’t have those available, then LCDs are a better choice for you.

The problem with adjusting brightness on an LCD is that the controls don’t do what you expect them to do. With a CRT, the brightness setting adjusts the electron gun current, which changes brightness, whereas in LCDs the contrast setting typically changes the backlight brightness and the brightness setting adjusts how much the LCD crystals are allowed to open or close, which adjusts both contrast AND brightness at the same time.

If you actually want to improve the black levels on an LCD to gain more apparent contrast without turning up the ambient lights, you have to turn the contrast way down and adjust the brightness to comfort. Turning the contrast up and brightness down results in a washed out display that glows in the dark – though in some better LCD screens the controls are the right way around again; your mileage may vary.

Both. A properly adjusted CRT does not glow significantly. That is an artifact of trying to drive the tube too hard to gain more maximum brightness against too much ambient light (or to “fix” an old faded tube).

And how many of them have been made in the last 5-10 years? How many hours do you have on the tubes? When was the last time you saw a brand new CRT monitor?

Of course they’re going to look terrible after 20-40 years of age and more than 50,000 hours of use.

So in ambient light I don’t notice any glow from an LCD, and yet you claim CRTs are better because they require complete darkness to use them. Which is not only silly, but also false. Fact is a CRT is an analog device, and it suffers from noise in the input signal, the signal processing, and the output signal. Noise causes the tube to glow no matter how you adjust it. And the noise floor gets more visible as you decrease signal strength. When you adjust the tube for a dark room, the SNR goes down and so does the signal-to-glow ratio. That’s how analog signals work, and nostalgia doesn’t change the laws of physics.

I don’t recognise your brightness/contrast-swap from any LCD I ever owned. Even the cheapest mainstream one I ever had, a Samsung 206BW, had the controls the right way around 20 years ago. The cheapest LCD I can still check is my HP Mini 210 netbook (remember those?) and as expected, changing the brightness changes the backlight strength. So again, reducing brightness on an LCD reduces light leakage just as it does on a CRT. Maybe the fact that you got the controls the wrong way around explains why your LCD experience is so bad.

Your last claim also makes no sense. When there’s a lot of ambient light, you can turn the brightness up more without seeing the glow anyway. So properly adjusting it is not the issue. Unless you mean a tube adjusted for sunlight glows in a dark room. Sure. And sure, an LCD adjusted for sunlight glows in a dark room too. But an LCD adjusted for low ambient light doesn’t glow in a dark room either.

At least you acknowledged that the GREY tube surface was a serious problem unless you enjoyed living like a vampire.

I’m old enough to have had several computer CRTs, some of which were above average. I have no nostalgia for them, or their failure rates (at least for those made from the mid-90s to early 00s.) And while the cheap business class LCDs of today are making a good run at being as terrible as the cheap business class CRTs of yesteryear – at least you don’t get a splitting headache an hour into your day from the crap refresh rate.

Though, to be fair, the crap CRTs from back then at least had some semblance of decent color and contrast compared to the abysmal performance you get from some cheap modern LCDs. On the high end, some of the CRTs had good color and contrast, but you’d want some leaky shades so your room wasn’t so bright that you noticed the dark pixels glowing. Whereas with a modern OLED display, that’s not an issue.

Oh, I see you added another reply about age and hours. First of all, I’m old enough to have lived through the CRT days as an adult. My opinions are not solely based on how CRT’s look now. Second, to get “more than 50,000 hours of use” on a 30 year old screen I’d have to use the screen 4.6 hours a day every day! If you have to exaggerate that much to make a point, you don’t have a point.

I did not make that claim.

It’s not an exaggeration. Many monitors remain on for much longer than 4.6 hours a day. Just look at any office where people leave their monitors powered on for the whole time, and forget to turn them off. Even I have ~22000 hours on my monitor and it’s what, 7 year old perhaps?
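As a quick check of the arithmetic in this exchange, here is a minimal sketch. The function name `hours_per_day` is made up for illustration, and a 365-day year is assumed.

```python
def hours_per_day(total_hours: float, years: float, days_per_year: float = 365.0) -> float:
    """Average daily usage needed to accumulate total_hours over the given span."""
    return total_hours / (years * days_per_year)


# 50,000 hours spread over a 30 year old screen
print(round(hours_per_day(50_000, 30), 1))  # 4.6

# ~22,000 hours on a roughly 7 year old monitor
print(round(hours_per_day(22_000, 7), 1))   # 8.6
```

So both figures are consistent: 4.6 hours a day is the average needed to reach 50,000 hours in 30 years, and the 22,000-hour monitor works out to roughly a working day of use, every day.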

yeah that’s all i was thinking about throughout this thing…i’ve owned a lot of garbage CRTs, and a few garbage LCDs as well. but my last CRT was from the climax of it, a 2001 Dell with near-Trinitron quality (IMO), and it was great…and today every LCD i have seems pretty good too. especially in phone displays, i’ve had an LCD that was so great, and an OLED that was so awful that side-by-side i preferred the LCD!

all the technologies are a lot better at their relatively more mature end, to the extent where the trade offs between them just don’t seem that big compared to the march of progress

I think that’s generally true, but there’s sometimes also a drop in quality towards the very end.

Just think of 3,5″ floppy disks and drives.

Towards the end, when they no longer generated good profits,

the quality decreased a lot and everything was made as cheaply as possible.

Keyboards are similar, I think. Manufacturers like Cherry produced “cheap” keyboards in the 1980s and 1990s that would now be considered more on the high-end side.

Or let’s take vintage radios like the Yaesu FT-101 ham transceiver.

It’s built in a steel chassis. That kind of build quality is unthinkable nowadays.

From an electrical side, too. The amount of shielding and the low noise floor and sensitivity of that receiver circuit (triple super, I think).

Black level was an internal adjustment on classic color TVs, part of the servicing instructions. I suspect later CRTs omitted that adjustment.

It depends, I think. Domestic TVs in the average household weren’t Trinitron, maybe.

You know, the kind of ordinary TVs used by kids in their bedroom to play the NES or C64..

But broadcast monitors used in TV studios had a higher chance of being Trinitron.

But on other hand, the Commodore 1701/1702 were often used in broadcast sector, too.

And they weren’t Trinitron/by Sony, but rather a Commodore version of the JVC C-1455 (a PVM).

https://crtdatabase.com/crts/commodore/commodore-1702a

Anyway, I guess it’s best compared to HiFi equipment.

Some people had an ordinary radio and cassette recorder or a radiogram at home, while some had a HiFi Stereo tower in living room.

People who loved quality thus had a TV using Trinitron or a competing technology.

But either way, from my experience, I think that there’s a simple rule of thumb.

– If you have low-quality content (source material) then a low-end equipment matches it well.

– If you have high-end content (source material) then a high-end equipment matches it well.

(That’s because low-end content may have unwanted noise and artifacts that suddenly become visible on a high-end equipment only.

Just think of headphones here. If you have a cheap music cassette, a cheap walkman and a cheap pair of walkman headphones then the music may sound “okay” to you.

If you listen to the same music cassette on a HiFi deck or with a pair of HiFi headphones, the experience might be really bad.)

The second one has an exception to the rule, but merely to a given point.

If you down-sample the high-quality material in best possible way,

then it might match the lower-end equipment in best possible way and use it to its fullest.

If it doesn’t, however, then the down-scaling process will introduce noise or artifacts.

It’s like downscaling a picture by either even vs uneven zoom factors with/without interpolation vs simply taking a picture at native resolution.

In some situations, the image might have been cleaner if it was taken with a digital camera

that operated at the lower resolution natively rather than an HD camera whose pictures had been downscaled in post-processing.
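The point about even versus uneven zoom factors can be illustrated with a minimal sketch of nearest-neighbour downscaling along one row of pixels. The helper names are hypothetical and this is not how any particular scaler is implemented; it only shows which source pixel each output pixel would pick.

```python
def nearest_source_indices(src_len: int, dst_len: int) -> list[int]:
    """Source index sampled for each destination pixel (sampling at pixel centres)."""
    return [((2 * i + 1) * src_len) // (2 * dst_len) for i in range(dst_len)]


def steps(indices: list[int]) -> list[int]:
    """Distance between consecutive sampled source pixels."""
    return [b - a for a, b in zip(indices, indices[1:])]


even = nearest_source_indices(8, 4)    # zoom factor 2 (even)
uneven = nearest_source_indices(8, 5)  # zoom factor 1.6 (uneven)

print(even, steps(even))      # [1, 3, 5, 7] [2, 2, 2]
print(uneven, steps(uneven))  # [0, 2, 4, 5, 7] [2, 2, 1, 2]
```

With the even factor every step is the same size. With the uneven factor the step size varies along the row, which is where the visible irregularities come from unless interpolation smooths them over.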

That’s why DVD was such a success, by the way.

It took advantage of the (theoretical) full resolution of an SD television set.

Even owners of vintage TVs from early-mid 20th century saw an improvement over VHS.

The image quality of a well mastered DVD was on par with broadcast video tapes or Laserdisc.

At the same time, owners of a VGA or multisync monitor (CRT) could watch the same DVD in progressive scan, if the DVD player supported it.

Or if an SCART/RGB to VGA converter box was used, they were able to get an ED like quality, too.

My apologies for my bad English, it’s not my native language.

Yep, no kidding. This article is viewing CRTs with some serious nostalgia. A typical CRT display/television was small, blurry, low-resolution and had a bunch of artifacts (barrel distortion, color fringing, and whatnot). Indeed there were some high-end devices around – especially towards the end of CRT era – like Trinitrons and those were not too bad. But ordinary ones? I’ll take a modern cheap LCD any day.

And if we’re thinking of high-end CRTs, let’s compare to high-end LCDs. Those have vastly better color reproduction, viewing angles, etc. compared to cheap ones (to get a color shift, you’ll have to look almost edge-on, and at that point you’ll have no idea what’s on the screen anyhow)

Trinitrons were a horror to adjust, if for some reason one of the glued corrective magnets fell off its spot on the CRT without leaving a witness mark of where it resided. Sony Trinitrons had the contrast, Panasonic had the sharpness. Panasonics also were significantly easier to adjust.

Same here, this article feels like it’s comparing the pinnacle of CRT technology with mid-range TFT junk from 20 years ago.

I’ve had numerous TFT/IPS monitors and TV’s and all of them have been better than any of the many CRT’s I’ve owned or sat in front of throughout my life.

And weirdly the one thing I do notice when walking past the huge TV displays at my local Currys is that in order to get data down the wire fast enough, many of these big 4K TV’s and the boxes behind them are actually doing fairly lossy compression – watch any scene with rippling water or explosions and see the compression artefacts go wild. But even that is hard to notice unless you’re looking for it, and they’re getting better and cheaper all the time.

Yes. Most of the drawbacks griped about don’t even apply to OLED, and the newer IPS fixes most except black levels. And yeah, the real scourge is compression artifacts. The content we’re feeding 4k sets does not have 4k detail, even if it says it’s in “4k”

I see people pining for CRTs, but they’re not going to replace the 70″ hanging on your wall.

And my pet peeve: motion prediction artifacts. Watch a nature documentary where the camera is panning over a huge detailed scenery – the image rolls smoothly, then jerks every 1-2 seconds while the details vanish to blur and re-appear an instant later.

Having a higher data rate doesn’t help – it just makes the stuttering happen every 4-5 seconds instead.

CRTs have never had OLED black levels. I have a Sony PVM in my office which I use for light gun games; the blacks on that are, at best, dark grey.

Same here. I never miss the CRTs. The ones I have seen all glowed and were blurry. TVs were all blurry.

“Articles like this make me wonder what CRTs people had back then, did they all only have Trinitrons?”

It doesn’t matter what CRT TVs people had back then, because it’s irrelevant to the fact that CRT display technology can easily implement perfect black levels, because it’s an emissive display technology. To create black you simply don’t fire any electrons at the phosphors in the areas meant to be black.

“Perfect Black levels? Every CRT I ever had would give off a clear glow when sitting on a black screen.”

That’s because every CRT you had was cheap, i.e., with crude or nonexistent black level clamping circuitry. They would average out the brightness, so if the entire screen was supposed to be black the brightness would increase and it would look gray. But even those cheap CRTs looked great with typical well-lit scenes in movies or TV shows; blacks looked truly black.

“Far worse than modern standard LCDs not to even mention displays with dynamic LED backlights.”

Yeah, right. Those don’t have perfect blacks in any type of scene, whereas even cheap CRT TVs had perfect blacks in well-lit scenes.

Also, most any CRT PC monitor has perfect blacks even on a blank/black screen, if it’s adjusted right, even ones from the late 1980s when VGA monitors first came out.

“Didn’t need to worry about subpixel rendering? That’s because they were all blurry.”

No, they absolutely weren’t. If they were blurry, the small text on this page, which is made from 1-pixel-wide lines, wouldn’t look perfectly clear and sharp on the CRT PC monitor I’m using right now, and have been using daily for the past 19 years. I have about 30 CRT displays, including arcade monitors, PC monitors, and TVs. The oldest one was made in 1980 and the newest one was made in 2005. Absolutely none of them are “blurry;” not even close. If they were blurry I would hate CRTs, because blurry images bother my eyes.

I’m fine not having a pair of 80lb monitors on my desk.

Huh? 36kg? You mean something like a big 19″ SVGA CRT monitor?

The green monitors we used to use for the C64 or IBM PC were very lightweight.

The Commodore 1084 color monitor popular among C64 and Amiga 500 users weighed 11kg. That’s 24lb to you.

The historic IBM 5151 monochrome monitor weighed 5,5kg (12lb).

There are LCD/TFT monitors heavier than that.

A Sony GDM-FW900 (22.1″ 2304 x 1440) was 41.7 kg.
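For anyone juggling the units in this thread, a minimal conversion sketch follows. The helper names are made up for illustration. The only constant used is the exact definition of the pound, 0.45359237 kg.

```python
KG_PER_LB = 0.45359237  # exact, by definition of the international pound


def lb_to_kg(pounds: float) -> float:
    return pounds * KG_PER_LB


def kg_to_lb(kilograms: float) -> float:
    return kilograms / KG_PER_LB


print(round(lb_to_kg(80), 1))    # the "80lb monitor" in kg
print(round(kg_to_lb(11), 1))    # Commodore 1084 in lb
print(round(kg_to_lb(5.5), 1))   # IBM 5151 in lb
print(round(kg_to_lb(41.7), 1))  # Sony GDM-FW900 in lb
```

The rounded figures quoted above (36kg, 24lb, 12lb) agree with these conversions, and the GDM-FW900 comes out at a little over 90lb.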

Any larger would have been a television.

I had a Mitsubishi 36 inch 800×600 monitor that I used as a TV after getting it for free. I forget whether it weighed 300 pounds (well over 100kg) and drew 400 watts, or if the numbers were the other way around.

Most of them. If one says CRT, it mostly means a 17″ one. Heavy.

An old Sony VGA 30″ display clocked in around 36 kg, as i recall. Barely could carry it alone from one room to another. Sadly the image was soft, refresh rate wasnt that good either. Was of course an old device by the time i had my grubby hands on it, but still, a new lg 17″ crt display was far better.

Also, an 80cm deep desk to the wall with a CRT monitor on it can have a small keyboard in front of it. Right now I can have my keyboard far more forward on my desk, such that my (not so fat) belly almost touches the desk edge, and my upper arms are straight down and my lower horizontal, i.e. the recommended ergonomical position, and I still can have some stuff on my desk in front of the keyboard.

When you have a 480p signal on a rented TV, any discussion of resolution sort of goes out the window.

But does this mean I’ll finally be able to use Lenslok copy protection again – https://en.wikipedia.org/wiki/Lenslok

I really want to get around to building one of these some day for a retro style CNC control – https://www.youtube.com/watch?v=bdo3djJrw9o

Ah, Lenslok. Thanks for the madeleine moment. I remember excitedly coming home as a small child from a family day-trip to London, my brand new copy of Elite for the ZX Spectrum clutched in my hands. Unfolding that small red lens assembly and then cursing all & sundry as I struggled to get it to work.

I think I did in the end, only to discover that I had as much natural aptitude for Elite as I do for deep-sea pearl diving, i.e. none whatsoever. I could never once manage to dock successfully, which as you can imagine made games very short :)

They do, and it depends on what sort of content you wanted to display. The phosphor mask is still a discrete grid and it interacts with the image you’re trying to display. The optimum resolution depends on whether you want to display perfect pixels, or display the information the pixels represent (a single pixel is not the same thing as an image “dot”)

See: https://en.wikipedia.org/wiki/Kell_factor

If you set the resolution to match the dot pitch, or an even fraction of that, you get a “perfect” image. Any higher and you get moire, any lower and you get beat artifacts, assuming all else lines up right. That is in effect the native resolution of a CRT.

I had read somewhere that moire didn’t exist on aperture grille masks and that was a feature of dotmask. idk if that is true.

When you try to fit two “grids” together, no matter what their patterns, you always get something like a moire effect unless they align perfectly. Moire as I understand comes from the beat frequency of higher density patterns on a lower density grid, a form of “aliasing”, whereas the artifacts discussed in the Wikipedia article come from lower density patterns on a higher density grid near the “Nyquist limit” where the phases of the two patterns almost align.

It’s the same thing in audio. Even though CD audio has a theoretical Nyquist limit at 22.05 kHz, without reconstruction filtering and dithering etc. you get quantization artifacts on sounds above 16-17 kHz because they’re close enough to the limit that the sum and difference of the two frequencies turns up as audible amplitude modulation, or in the case of television, weird wavy artifacts on repeating patterns.

The mask pattern can work as a dithering filter, so for example a delta mask can spread the error around and make it less visible, trading contrast errors for color errors, but it’s still there.
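The two effects described in this thread can be put into numbers with a minimal sketch. The function names and the example pitches are hypothetical, chosen only for illustration. The formulas are the standard beat period of two regular grids and the standard folding of a frequency around the sampling rate.

```python
import math


def beat_period(pitch_a: float, pitch_b: float) -> float:
    """Spatial period of the beat (moire) pattern between two regular grids."""
    if pitch_a == pitch_b:
        return math.inf  # perfectly matched grids never drift out of phase
    return pitch_a * pitch_b / abs(pitch_a - pitch_b)


def alias_frequency(f: float, fs: float) -> float:
    """Frequency that a tone at f appears at after sampling at rate fs."""
    return abs(f - fs * round(f / fs))


# Hypothetical example: 0.25 mm image pixels drawn on a 0.28 mm mask pitch
print(round(beat_period(0.25, 0.28), 2))  # beat period in mm

# A 25 kHz tone sampled at the CD rate of 44.1 kHz folds back below Nyquist
print(alias_frequency(25_000, 44_100))    # 19100
```

The closer the two pitches are, the longer the beat period gets, which is why nearly matched grids produce slow, wide bands rather than fine shimmer.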

The death of CRTs was a blessing because those monsters ate power like there was no tomorrow.

Want that CRT look? There’s always Cathode-Retro

Not enough, need the full NTSC experience? We have an NTSC-CRT encode/decode simulator

Too much and just want a retro terminal window? There’s cool-retro-term.

Seriously, there’s no reason to bring back actual CRTs.

I miss black/white monitors and CRT oscillographs, though.

There’s something magical about a grid-free monitor that uses an energy beam. 💙

I also love the fact that they’re not using RGB sub pixels, but have a smooth image.

Playing 3D Monster Maze for ZX81 on a real b/w TV has an eerie atmosphere that can’t be replicated so easily by an LCD setup or an emulator.

I miss mono green or orange CRT’s, it would be nice to see that done with LCD’s for some applications but RGB panels are so cheap and mass produced now it’s unlikely to be worth it.

Hi! I think that large e-ink displays would be really nice to have, too.

They aren’t orange or green, but if they had levels of grey they could simulate the soft image of a b/w television set.

That would be nice for fans of ZX81/Timex 1000 or the ZX Spectrum fans.

Or fans of an Atari ST (thinking of SM124 monitor, in hi-res mode).

The downside of e-ink is slow response time, action games wouldn’t be as nice as on a CRT.

Same ways, OLED screens might be usable to simulate old TTL monitors which had just one intensity level (or two, with intensity line).

Something like a Commodore PET or a Hercules monitor..

Cool-retro-term is, uh, cool! But, the standard settings are too extreme.

Real mono CRTs didn’t flicker all time or have their picture rolling.

That’s as if someone watched Matrix movie and thought that was real.

Rather contrary, an IBM 5151 monitor or a DEC VT-100 terminal had a clean, sharp and -stable- image.

Anyway, cool-retro-terminal can be adjusted to match the real thing, so it’s not a big deal.

The problem rather is, that modern users may not remember how good the real thing looked..

That’s why it’s so important to document the original technology in great detail. Pictures, footage, schematics and specs..

Power, with a hernia in every package.

If you go back to the 60s maybe. In the 70s, CRT TVs started becoming ever more energy efficient. First, the color tube behemoths running 300+W for pretty much any picture size. Then the early transistor models – I have a 1973 Grundig color 14 inch that uses 110W. They quickly got more efficient and by ’78 we’re seeing 14 inch color TVs consuming just 50W. In the 90s, a decent 14 inch TV ran around 35W.

Mid 2000s LCD monitors had about the same wattage as early 80s CRT TVs with the same screen size. Tested on 19 inch, a 1982 TV and an early 2000s TV both ran at around 55 Watts – only the old CRT TV dipped down to around 48W with an all-black picture while the LCD did not. Also the LCD wasn’t able to display an all-black picture, more like all-dark-gray. And since the LCD was indeed more compact, that also meant that generating the same amount of heat made it pretty toasty while the ancient TV merely got warm.

Only when LED backlit panels became the norm, they finally got really energy efficient (I remember a 24 inch widescreen monitor in 2014 that used 18W. A CRT this wattage would have been 9 inches maximum – or around 16 inch black&white – that’s based on early 80s B&W CRT consumption – I had a 1981 made 11 inch B&W that consumed only 12W)

The drawback of knowing this is being surrounded by stacks of ancient e-waste CRT TVs. But they’re working, I like them, I measured them.

Had a mid-2000s LG L1919S 19″ LCD monitor with around 30W consumption. Didn’t heat up.

idk, in the computer world back then, the screens ate power. in today’s situation, the cpu+gpu eat more power combined in a lot of cases.

But the revolution won’t be televised:

https://en.wikipedia.org/wiki/The_Revolution_Will_Not_Be_Televised

You even wonder with the current level of censorship if the Revolution won’t be on the internet either.

the only LCD vs CRT difference that means anything to me at this point is the wake-from-sleep time. my last CRT would wake almost immediately, and would come up to full brightness in 5-10 seconds. but the picture was already there (dimly) as it was warming up. that was understandable because it physically needed to warm up the components!

but this 32″ budget LCD TV i have takes a full 10 seconds to display anything at all (during which time it shows a splash screen). and the “only” thing it has to do is boot up the software. the panel and backlight each “warm up” in much less than a second.

I have wondered why an LCD TV has to take so long to boot, but have never looked into it. I have several non-smart 50″ units that take 8-10 seconds to start displaying anything. Having had to repair the backlights in all of them, I know, as one would expect, that the LEDs light instantly when power is applied. But, it sure does feel like waiting for a CRT to warm up.

I mean, these things don’t connect to a network. I can see some discovery time for CEC type devices on HDMI, but that could surely happen after the picture was up.

If anybody wants to try to spy on it and see what it’s doing during bootup, I can donate a unit to the cause. My wife would appreciate it.

Many modern TVs run android so that needs to boot on a fresh power up. Once booted they will wake up from “off” in a couple seconds, at least mine do.

I speculate that the “solution” in the “smart TV” is prolly powered by the computational equivalent of Arduino Nano. Maybe Mega, but surely not anything better than that. (SARCASM! SARCASM! I do know that the likes of Firestick used ARM A53 at least, which is quite a powerhouse to start with, video/sound decoding embedded, etc).

And what about monitors, which wake up in 2s?

There is still a lot of promise in Thin Film ELectroluminescent Displays but more research needs to be done. They are much lower power than other light-emitting displays. I’ve seen a red green TFEL display refreshed at 300Hz without any ghosting.

You mean like the “Indiglo” backlights that were a fad for a while? Those seem to have a lifetime measured in weeks or months.

No, that was thick film EL.

CRT simulator shader filters in emulators are getting pretty good, wondering when someone will make a decent faux-CRT TV with a 4K pixel density 4:3 panel (preferably OLED) that applies the CRT-filter effect to the video inputs with minimal lag. Powerful FPGAs are cheap enough now that this should be possible…

I love my 14″ PVM but that’s not gonna last forever…

I hope that there will be remaining a small market for monochrome CRTs, at very least.

Or that the last factories won’t close/won’t be demolished.

Mono CRTs are not very complicated to make from a mechanical point of view,

it’s mainly a glass tube with a phosphor screen.

They also need no screen mask with a perfect alignment,

which was the trickiest part of color CRTs.

Mono CRTs are great because they’re very fast (no lag) and need no complicated electronics, no microcontroller.

From a practical point of view, they could be useful in very humid or otherwise hostile environments.

Or be used for instrument panels, just like electro mechanical ones.

Also, in their high-res versions (SVGA etc) they would make for excellent CAD or medical monitors, providing both a soft and detailed picture.

Comparable to e-ink panels, just less complicated.

I mean, let’s imagine what could be done with nowadays technology..

Modern mono CRTs could be very flat and lightweight, too! With low radiation levels.

Since there’s just one electron gun, there are no convergence issues, either.

I just want to play my light-gun games again :(

I’m actually using a CRT for many modern games. My monitor can do 1600x1200p@100hz and I can combine GPU supersampling with it to look pretty good. I can also do 1920x1440i@160hz if I want more frames, and I can use temporal antialiasing to get rid of the interlacing shimmer along contrasting edges.

I think we’ll get flat CRT displays about the same time that NASA gets their nanoscale vacuum channel electronics.

Not necessarily that one requires the other, just that a big government agency using something vaguely reminiscent will make it look good to the marketing execs.

Gonna pass on the 2nd “golden age” of visible refresh rates that made me nauseous… Even so-called fast 120hz makes you want to gouge your eyes out faster than the overly bright blue of LCDs…

Make 4k plasma screens with quantum dot filters and 240hz refresh rates!

Display size is the deciding factor in perceiving flicker. 100 hz on 14″ was flicker free, not so on a 19″ crt.

CRTs may excel in some metrics but distinctly suffer from looking a whole lot worse than even old LCDs. Anyone who spent time looking at CRTs back in the days knows this.

CRT isn’t CRT, though. There are so many different versions.

Some LCD/TFT panels also had (and still have) their issues.

Interpolation and “noise” (wobbly pixels) did exist, too. Or low pixel density.

Sometimes, insects and mould infect the LCD layers, too.

Then the CCFL backlight failed too early on some models.

In principle, there are two kinds of color CRTs (not counting various screen mask types).

The household, consumer grade CRTs and the professional grade CRTs.

The cheap CRTs have a dot pitch of 0,4mm or bigger and are great for watching TV or playing pixelated video games such as the NES.

The low physical resolution of the CRT makes the RGB pixels blur together, which softens the image and makes it look more natural.

By contrast, the professional CRTs have a low dot pitch of 0,2mm and a precise screen mask, too.

Each computer pixel is meant to be clearly visible (including the sub pixels at close view).

SVGA monitors from the mid-90s onwards fall into this category, for example. Or earlier CAD monitors from the 80s.

PVMs used in TV studios are similarly sharp/precise by design,

though they’re meant for TV video material rather.

They have analog inputs, such as CVBS, RGB, YPbPr and so on.

That’s why it makes little sense for retro gamers to upgrade from a TFT/LCD monitor to a late 90s CRT monitor, by the way.

An SVGA monitor, even if CRT based, doesn’t provide any better image than a flat screen monitor.

Both draw the raw pixels very accurately, which wasn’t intended by game developers of the late 80s and early 90s.

Games being developed for Amiga or MCGA/VGA PCs assumed a blurry monitor, such as Commodore 1084S or IBM PS/2 Model 8512..

They have a dot pitch of 0,42mm and 0,41mm, respectively.

The Commodore 1701/1702 monitor even had a dot pitch of 0,64mm!

No wonder the C64 games looked so good on it! All the pixelation was being removed through “analog” filtering.

The monitor type also was used in broadcast,

so cartoons of the 80s and 90s probably had been test viewed on such a monitor, as well.
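To put the dot pitch figures above in perspective, here is a rough sketch of how many phosphor triads fit across a screen. It rests on loudly stated assumptions: a 13 inch viewable diagonal at 4:3 is picked only for illustration, and the quoted dot pitch is treated as a purely horizontal pitch, which real tubes do not strictly follow. The function name is hypothetical.

```python
import math

MM_PER_INCH = 25.4


def triads_across(viewable_diagonal_in: float, dot_pitch_mm: float,
                  aspect: tuple[int, int] = (4, 3)) -> float:
    """Approximate number of phosphor triads across the visible screen width."""
    w, h = aspect
    width_mm = viewable_diagonal_in * MM_PER_INCH * w / math.hypot(w, h)
    return width_mm / dot_pitch_mm


# Assumed 13 inch viewable area; pitches are the ones quoted in the comment
for pitch in (0.64, 0.42, 0.2):
    print(pitch, round(triads_across(13, pitch)))
```

Under these assumptions a 0.64mm tube has only a little over 400 triads across, not many more than the 320 pixels of a C64 line, while a 0.2mm tube has well over a thousand. That is roughly the gap between a screen that smooths pixels together and one that resolves each of them.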

Another reason to keep a CRT: VHS tapes and hand drawn animation syndicated for television look as intended on an SD resolution CRT.

Anyway, I hope that sums things up a bit.

The type of screen mask surely matters, too, but the effect of the dot pitch is often overlooked.

Because without knowing, users of today could draw the false conclusion

that there’s no real difference between a CRT and LCD/TFT, except that a CRT looks more fuzzy.

But it’s not like that, old video games like NES and Sega Genesis did indeed look great on a period-correct household TV set or ordinary video monitor.

And it wasn’t because of scan lines or a noisy RF connection (well, not entirely).

It was because the games had been made for the SD monitors of their time.

There’s nothing rose coloured about this, it’s a reasonable conclusion.

PS: Arcade cabinets were special, by the way. They had built-in high-quality RGB monitors to begin with (no composite video).

Here, scan lines and a pixel-perfect image did matter!

Also, arcade boards were high-end and operated at a higher graphics fidelity than, say, a humble NES.

That’s a detail that users of scalers seem to miss when they try to improve the picture quality.

8/16-Bit video game consoles and the arcade cabs have different needs, in short.

The best CRTs ever made were manufactured by Philips Mullard in the UK, renowned for making world-class audio valves that are still highly sought after for hi-fi quality. No one else makes better valves. However, the quality of modern TV and computer screens is a lot higher than years ago, so I’m surprised there is an interest in old CRT technology. We used to need 3 people to pick up a 30 inch monitor for audio-visual jobs. They were pretty heavy and cumbersome to move, and not good for your back. So I’m glad technology has improved, providing excellent pictures on most good brands. Having said this, some makes of TV are so thin now that they crack as you take them out of the box brand new. I advise people to have insurance, as they’re built too thin for my liking. Ross

I didn’t really verify this, but I read that one big push toward LCDs was due to the lower power consumption. But with the LCD the power use goes up with the square of the size, while with the CRT you only needed somewhat more power for the deflection electromagnets (that steer the electron beam), so the power consumption increase was more gradual, to the point that the crossover (where CRTs were back to using less power) was something like 21 inches.

“LC displays”

Dear lord!

Can’t we just add a grammatical rule that says that you are always required to use the full acronym or initialism, and furthermore, spell out the last word of it?

LCD displays

LED diodes

ATM machines

LASER radiation

RADAR ranging

…

I’m sure there are exceptions, but let’s ignore those. It’ll be fine.

This article is repeating a common misconception. CRT and plasma do not have perfect blacks. Being self-luminous is not the equivalent of perfect black. In both CRT and plasma screens it’s impossible to completely turn off one pixel. They will always have some “leakage” because of the nature of the type of screen. In plasma screens it’s something you can see as grain on blacks, while a CRT simply has some phantom luminosity. Everyone who ever had a CRT should remember that when you turn it on, the usable area of the screen turns a little brighter.

While there are some methods to reduce that and top end screens were better at that, it’s nowhere near being perfect black. Moreover, they are somewhat comparable to high end LCD right now.

In my head “OLED displays are a new technology and the cutting edge of tvs.”

The reality “OLED technology is really advanced but was developed in 2007 nearly 20 years ago.”

This… This hurts me…

Nobody wants CRTs back. This is the same group of people who think retro tech is cool when in reality its just clunky and inefficient.

So we couldn’t get by with LCDs paired with some form of XRAY source to mimic a CRT display?

Tich, tich.

Everyone’s a retro gangster till they go to move the CRT to dust the desk.

You can buy cheap engine hoist on aliexpress and use that to lift CRT if you need to clean your desk.

I see I’m a little late to the game since the aspect of X-ray exposure has already been broached. I’ll add my vote that I happily retired my Trinitron CRT desktop display with its two tension wire shadow artifacts almost 30 years ago and my corneas say, “Thanks.” I never looked back (pun alert).

Keep in mind that it wasn’t just the high voltage rectifier vacuum tube which generated X-rays but the CRT tube as well. (Yes I know, later TVs and monitors used solid state triplers once semiconductor diodes improved).

If you really want a nice color CRT, you need to go back to the ones made in the late 1950s and early 1960s by RCA for the particular red phosphor they used. The electronics driving the tube were less capable but the color rendition was a better match to the CCITT (ITU-T) CIE 1931 color space.

One of my acquaintances is a radiologist and he uses high grade LCD monitors. If there were ever a place that gray scale matters, radiology is probably it.

Hit on the head. The desert reds in 1960s TV westerns never looked better than on an RCA of that era!

Ohh damn i totally had forgotten about wire shadows, when i read your text i got a mental image of it, and recalled how annoying it was, back when one actually could sit close enough to the display to see the issue.

I have three CRT televisions sitting around as decorations, but they do work. I occasionally run them in the winter for extra space heat. I still use my Dell P1130 Trinitron as a 4th monitor!

I feel like I’m housing refugees, sheltering them from a highly unpopular and misunderstood phenomenon.

https://en.wikipedia.org/wiki/Shadow_mask

Not having a resolution, lol.

800×600, 1280×1024, yeah :D (And glowed with black screens also)

I never miss CRT’s.

Do the FED/SED type systems emit a high-pitch whine like CRTs? I hate that noise.

Wait, turns out I’m too old to hear it anymore.