Late last Tuesday night, after watching F1: The Movie at the Steve Jobs Theater, I was driving back from dropping Federico off at his hotel when I got a text:

Can you pick me up?

It was from my son Finn, who had spent the evening nearby and was stalking me in Find My. Of course, I swung by and picked him up, and we headed back to our hotel in Cupertino.

On the way, Finn filled me in on a new class in Apple’s Speech framework called SpeechAnalyzer and its SpeechTranscriber module. Both the class and module are part of Apple’s OS betas that were released to developers last week at WWDC. My ears perked up immediately when he told me that he’d tested SpeechAnalyzer and SpeechTranscriber and was impressed with how fast and accurate they were.

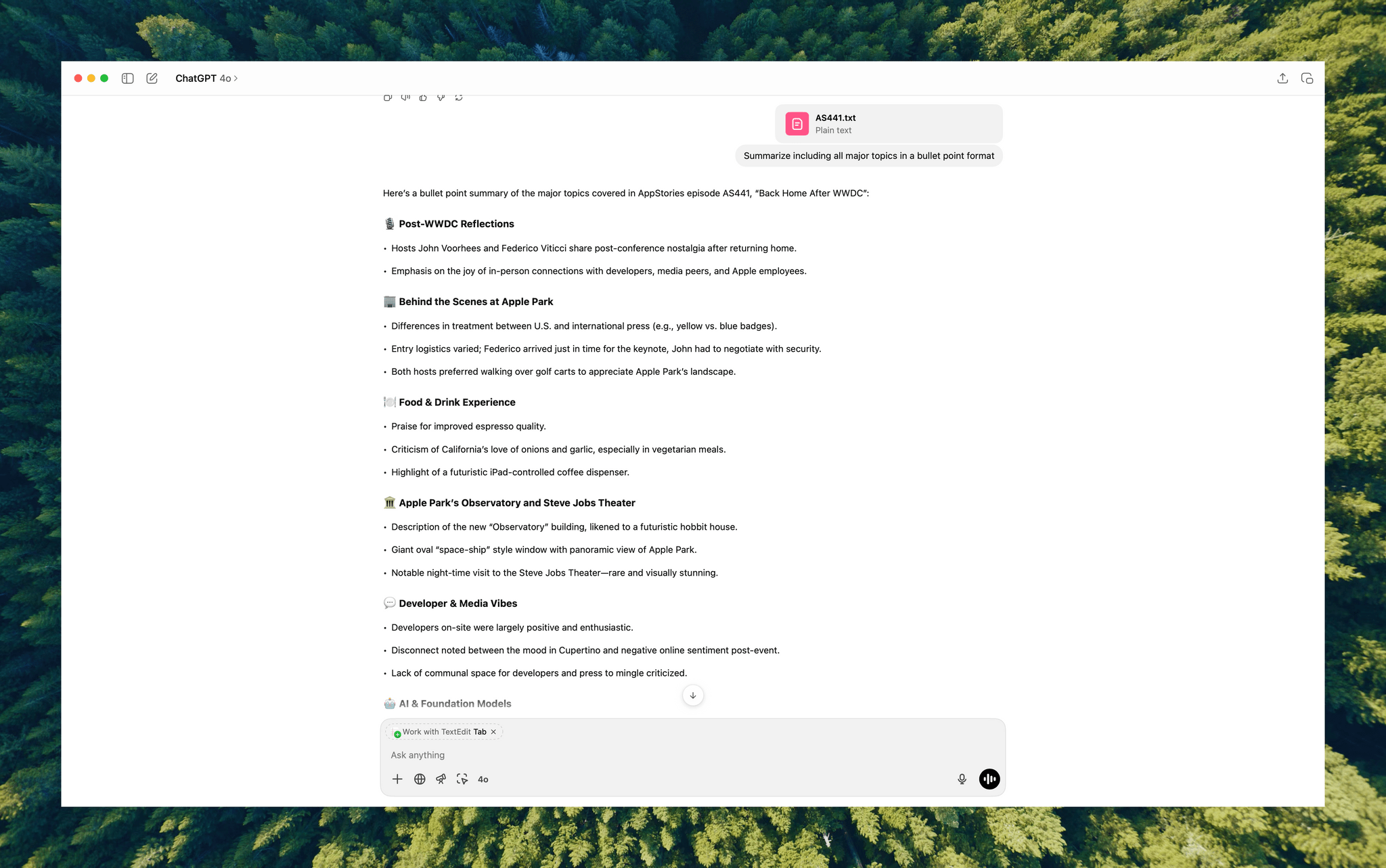

It’s still early days for these technologies, but I’m here to tell you that their speed alone is a game changer for anyone who uses voice transcription to create text from lectures, podcasts, YouTube videos, and more. That’s something I do multiple times every week for AppStories, NPC, and Unwind, generating transcripts that I upload to YouTube because the site’s built-in transcription isn’t very good.

What’s frustrated me with other tools is how slow they are. Most are built on Whisper, OpenAI’s open source speech-to-text model, which was released in 2022. It’s cheap at under a penny per one million tokens, but isn’t fast, which is frustrating when you’re in the final steps of a YouTube workflow.

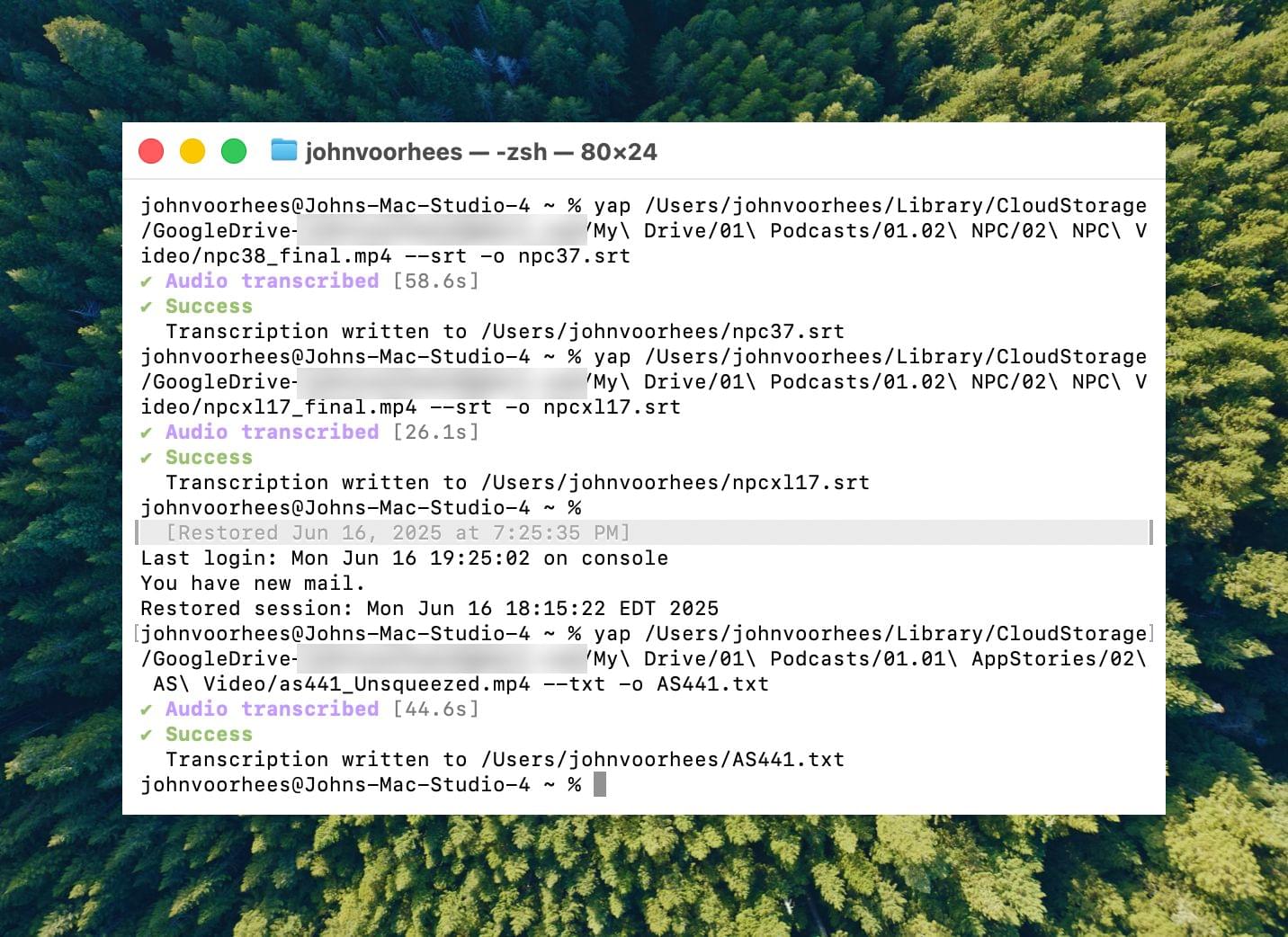

I asked Finn what it would take to build a command line tool to transcribe video and audio files with SpeechAnalyzer and SpeechTranscriber. He figured it would only take about 10 minutes, and he wasn’t far off. In the end, it took me longer to get around to installing macOS Tahoe after WWDC than it took Finn to build Yap, a simple command line utility that takes audio and video files as input and outputs SRT- and TXT-formatted transcripts.

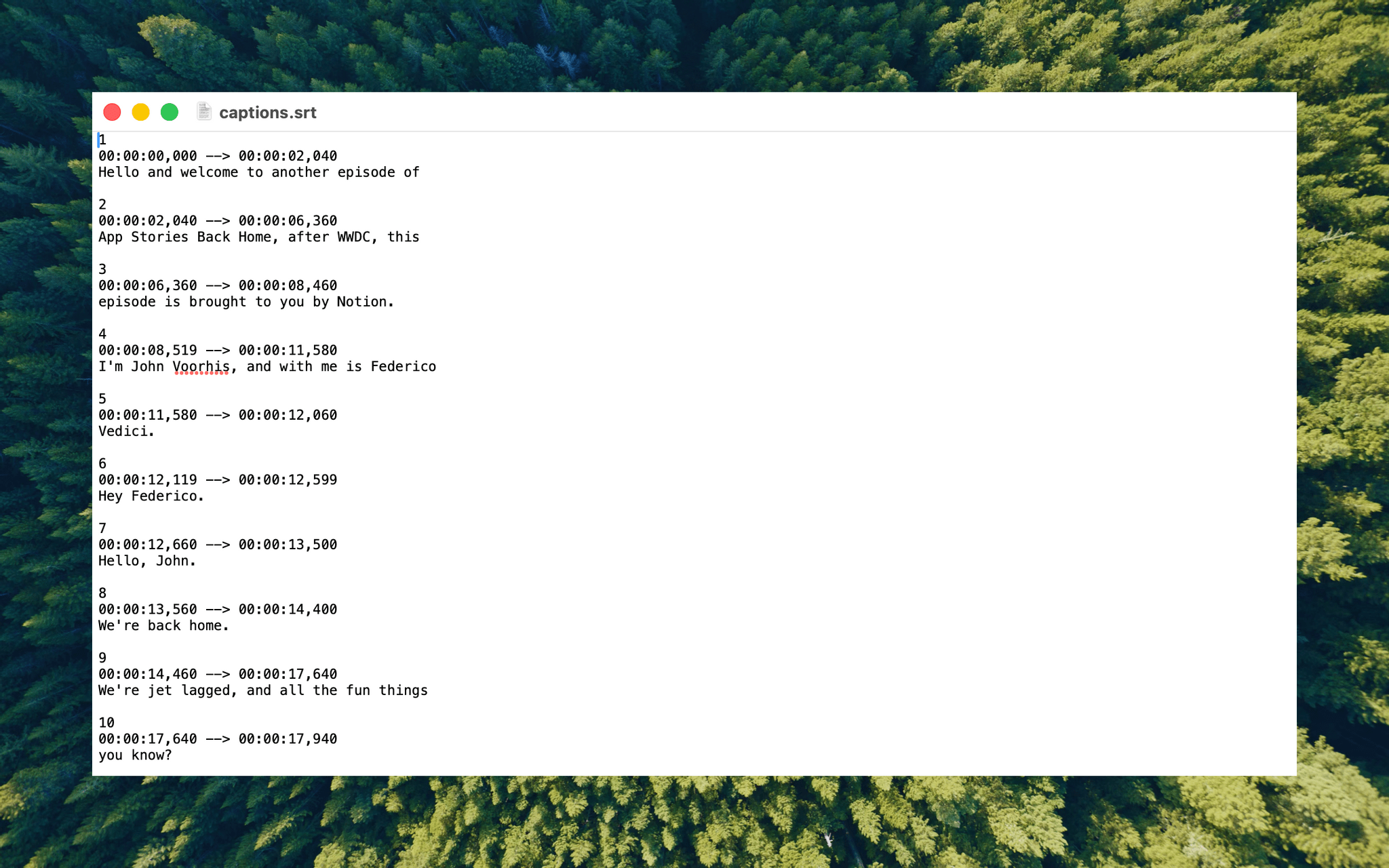

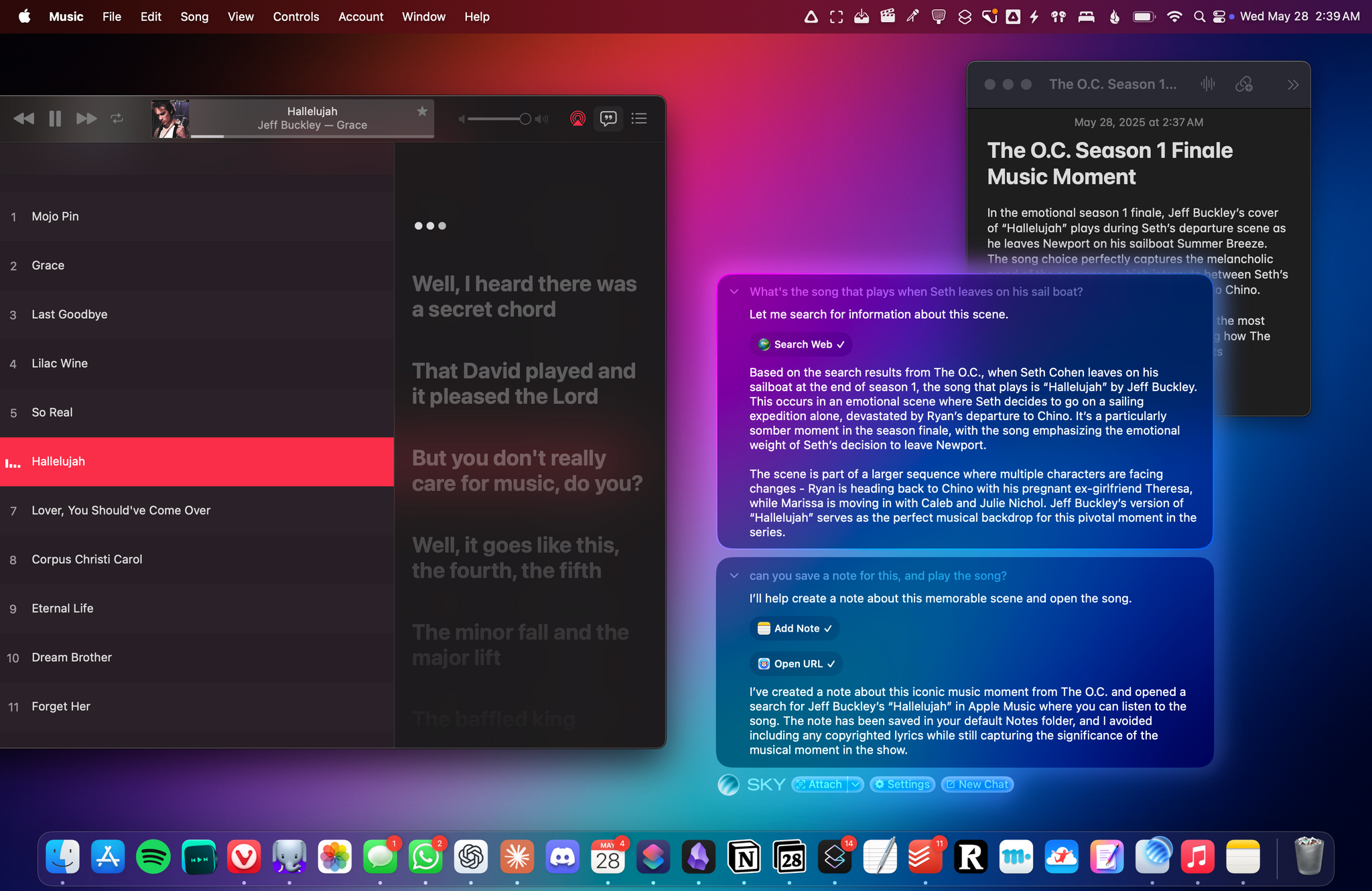

Yesterday, I finally took the Tahoe plunge and immediately installed Yap. I grabbed the 7GB 4K video version of AppStories episode 441, which is about 34 minutes long, and ran it through Yap. It took just 45 seconds to generate an SRT file. Here’s Yap ripping through nearly 20% of an episode of NPC in 10 seconds:

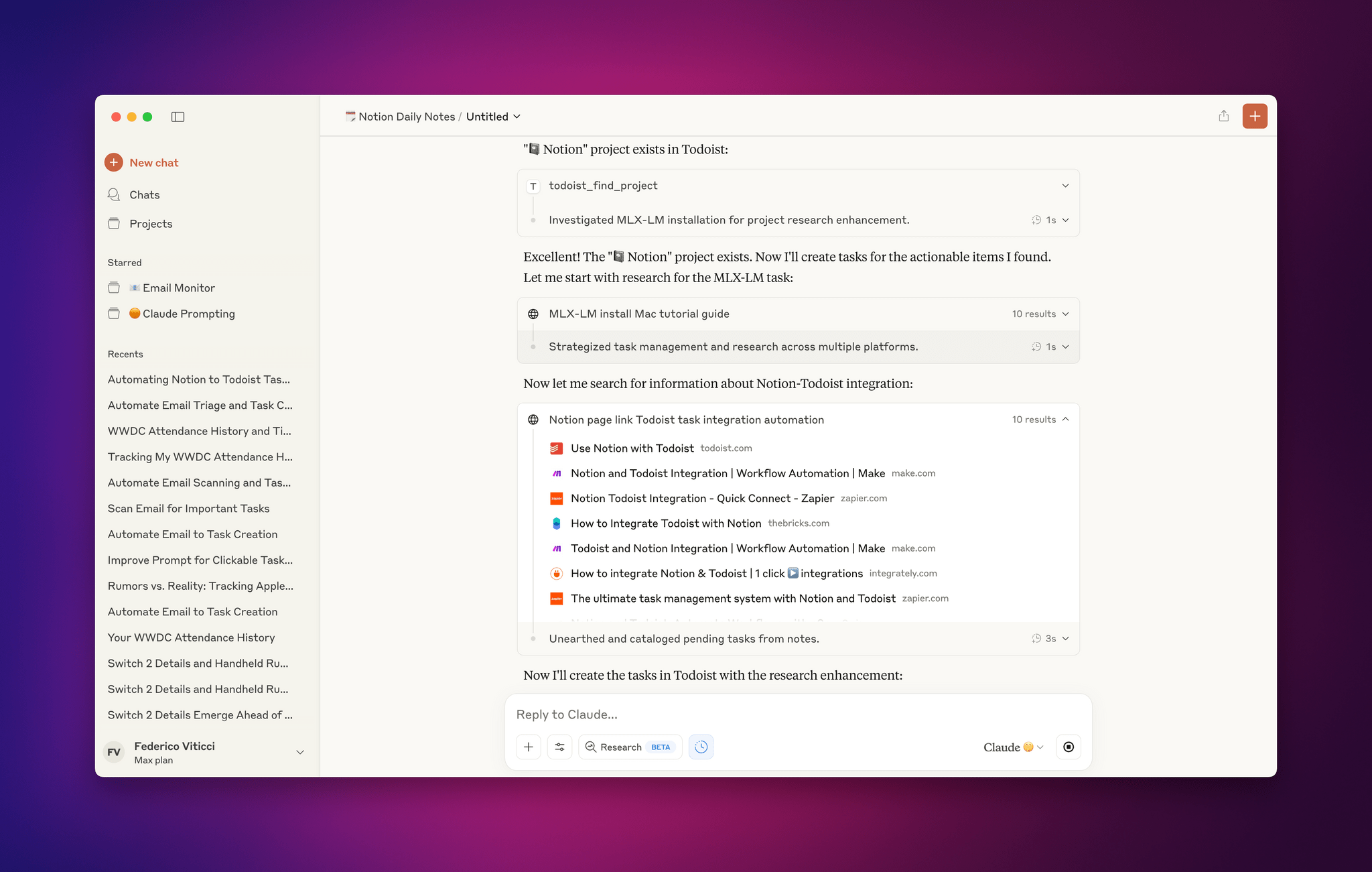

Next, I ran the same file through VidCap and MacWhisper, using its V2 Large and V3 Turbo models. Here’s how each app and model did:

| App |

Transcripiton Time |

|---|

| Yap |

0:45 |

| MacWhisper (Large V3 Turbo) |

1:41 |

| VidCap |

1:55 |

| MacWhisper (Large V2) |

3:55 |

All three transcription workflows had similar trouble with last names and words like “AppStories,” which LLMs tend to separate into two words instead of camel casing. That’s easily fixed by running a set of find and replace rules, although I’d love to feed those corrections back into the model itself for future transcriptions.

What stood out above all else was Yap’s speed. By harnessing SpeechAnalyzer and SpeechTranscriber on-device, the command line tool tore through the 7GB video file a full 2.2× faster than MacWhisper’s Large V3 Turbo model, with no noticeable difference in transcription quality.

At first blush, the difference between 0:45 and 1:41 may seem insignificant, and it arguably is, but those are the results for just one 34-minute video. Extrapolate that to running Yap against the hours of Apple Developer videos released on YouTube with the help of yt-dlp, and suddenly, you’re talking about a significant amount of time. Like all automation, picking up a 2.2× speed gain one video or audio clip at a time, multiple times each week, adds up quickly.

Whether you’re producing video for YouTube and need subtitles, generating transcripts to summarize lectures at school, or doing something else, SpeechAnalyzer and SpeechTranscriber – available across the iPhone, iPad, Mac, and Vision Pro – mark a significant leap forward in transcription speed without compromising on quality. I fully expect this combination to replace Whisper as the default transcription model for transcription apps on Apple platforms.

To test Apple’s new model, install the macOS Tahoe beta, which currently requires an Apple developer account, and then install Yap from its GitHub page.