The concept of a 3D scanner can seem rather simple in theory: point a camera at the physical object you wish to scan, move around the object to capture all angles, and stitch the photos together into a 3D model along with textures created from the same photos. This photogrammetry approach is definitely viable, but also limited in the sense that you’re inferring three-dimensional parameters from a set of 2D images and relying on suitable lighting.

To get more detailed depth information from a scene you’d need to perform direct measurements, which can be done physically or through e.g. time-of-flight (ToF) measurements. Since contact-free measurement methods tend to be preferred, ToF makes a lot of sense, but comes with the disadvantage of measuring only a single spot at a time. When the target is actively moving, you can fall back on photogrammetry or use an approach called structured-light (SL) scanning.

SL is what consumer electronics like the Microsoft Kinect popularized, using the combination of a visible and near-infrared (NIR) camera to record a pattern projected onto the subject, which is similar to how e.g. face-based login systems like Apple’s Face ID work. Considering how often Kinects have been used as general-purpose 3D scanners, this raises many questions regarding today’s crop of consumer 3D scanners, such as whether they’re all basically just Kinect clones.

The Successful Kinect Failure

Although Microsoft’s Kinect flopped as a gaming accessory despite an initially successful run for the 2010 version released for the XBox 360, it does give us a good look at what it takes to make real-time 3D scanning work for the consumer market. SL-based scanning was the obvious choice for the original Kinect, as it was a mature technology that was also capable of tracking in real time where a player’s body parts are in space.

Hardware-wise, the Kinect features a color camera, an infrared laser projector and a monochrome camera capable of capturing the scene including the projected IR pattern. The simple process of adding a known visual element to a scene allows a subsequent algorithm to derive fairly precise shape information based on where the pattern can be seen and how it was distorted. As this can all be derived from a single image frame, with the color camera providing any color information, the limiting factor then becomes the processing speed of this visual data.

After the relatively successful original Kinect for the XBox 360, the XBox One saw the introduction of a refreshed Kinect, which kept the same rough layout and functioning, but used much upgraded hardware, including triple NIR laser projectors, as can be seen in the iFixit teardown of one of these units.

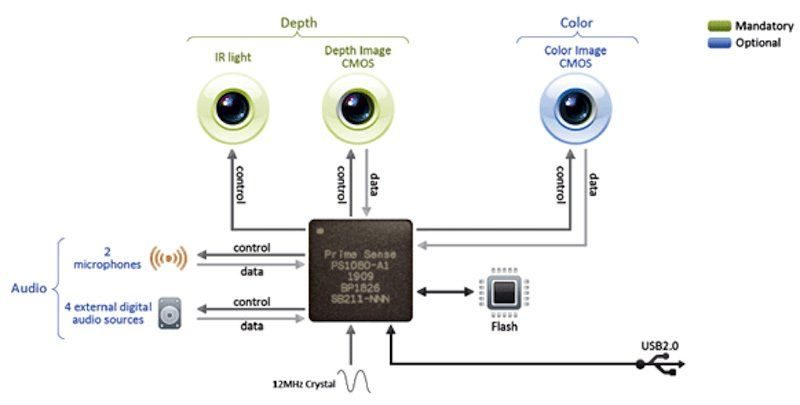

In both cases much of the processing is performed in the control IC inside the Kinect, which in the case of the original Kinect was made by PrimeSense, while the XBox One version uses a Microsoft-branded chip presumably manufactured by STMicroelectronics.

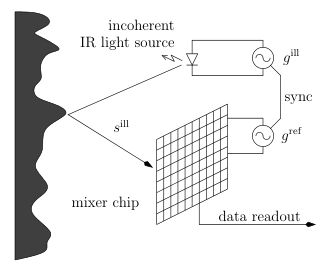

The NIR pattern projected by the PrimeSense system consists of a static, pseudorandom dot pattern that is projected onto the scene and captured as part of the scene by the NIR-sensitive monochrome camera. Since the system knows the pattern that it projects and its divergence in space, it can use this as part of a stereo triangulation algorithm applied to both the known reference pattern and the captured image. The measured shifts relative to the expected pattern thus yield a depth map, which can subsequently be used for limb and finger tracking in video games.
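
The triangulation step can be illustrated with a minimal sketch. It assumes a simplified model in which the projector acts as a second viewpoint, so that a dot's horizontal shift (disparity) maps to depth as focal length times baseline divided by disparity. The focal length and baseline values below are illustrative placeholders, not Kinect calibration data, and the function names are hypothetical.

```python
# Illustrative values only; these are NOT real Kinect calibration data.
FOCAL_LENGTH_PX = 580.0  # assumed focal length of the NIR camera, in pixels
BASELINE_M = 0.075       # assumed projector-to-camera distance, in metres


def depth_from_disparity(disparity_px, focal_length_px=FOCAL_LENGTH_PX,
                         baseline_m=BASELINE_M):
    """Triangulate the depth (metres) of one dot from its shift (pixels)."""
    if disparity_px <= 0:
        return None  # dot could not be matched, e.g. because it is occluded
    return focal_length_px * baseline_m / disparity_px


def depth_map(disparity_rows):
    """Convert a 2D grid of per-dot disparities into a grid of depths."""
    return [[depth_from_disparity(d) for d in row] for row in disparity_rows]


if __name__ == "__main__":
    # Larger shifts mean the surface is closer to the sensor.
    shifts = [[58.0, 29.0], [0.0, 116.0]]
    for row in depth_map(shifts):
        print(row)
```

Unmatched dots are returned as `None`, which is one simple way to represent the holes that occlusion leaves in an SL depth map.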

Here it’s interesting to note that for the second generation of the Kinect, Microsoft switched from SL to ToF, with both approaches compared in this 2015 paper by Hamed Sarbolandi et al. as published in Computer Vision and Image Understanding.

Perhaps the biggest difference between the SL and ToF versions of the Kinect is that the former can suffer quite significantly from occlusion, with up to 20% of the projected pattern obscured versus up to 5% occlusion for ToF. The ToF version of the Kinect has much better low-light performance as well. Thus, as long as you can scan a scene quickly enough with the ToF sensor configuration, it should theoretically perform better.

Instead of the single scanning beam that you might expect with the ToF approach, the 2013 Kinect for XBox One and subsequent Kinect hardware use Continuous Wave (CW) Intensity Modulation, which effectively blasts the scene with periodic, intensity-modulated NIR light, so that the NIR CMOS sensor is illuminated pretty much continuously by the light returning from the scene.
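
How a CW-modulated signal turns into a distance can be sketched as follows. This assumes the common textbook four-sample scheme, in which each pixel is sampled at four phase offsets and the phase shift of the returning light gives the distance via d = c·φ / (4π·f). The 80 MHz modulation frequency in the usage note is an assumption for illustration, not a confirmed Kinect parameter, and the function names are hypothetical.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def simulate_samples(distance_m, mod_freq_hz, amplitude=1.0, offset=2.0):
    """Simulate four correlation samples (0, 90, 180, 270 degrees) for one pixel."""
    phase = 4.0 * math.pi * mod_freq_hz * distance_m / SPEED_OF_LIGHT
    return [offset + amplitude * math.cos(phase + k * math.pi / 2.0)
            for k in range(4)]


def distance_from_samples(samples, mod_freq_hz):
    """Recover distance from four samples; ambient offset cancels out."""
    a0, a1, a2, a3 = samples
    # a3 - a1 is proportional to sin(phase), a0 - a2 to cos(phase).
    phase = math.atan2(a3 - a1, a0 - a2) % (2.0 * math.pi)
    return SPEED_OF_LIGHT * phase / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz):
    """Beyond this distance the phase wraps and distances alias."""
    return SPEED_OF_LIGHT / (2.0 * mod_freq_hz)
```

For example, `distance_from_samples(simulate_samples(1.2, 80e6), 80e6)` recovers roughly 1.2 m, while a target further away than `unambiguous_range(80e6)` (about 1.87 m) would alias back into that range. Needing several samples per depth value also illustrates why this approach takes multiple captures per frame.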

Both the SL and ToF approaches used here suffer when there’s significant ambient background light, which requires the use of bandpass filters. Similarly, semi-transparent and scattering media also pose a significant challenge for both approaches. Finally, there is motion blur, with the Kinect SL approach having the benefit of only requiring a single image, whereas the ToF version requires multiple captures and is thus more likely to suffer from motion blur if capturing at the same rate.

What the comparison by Sarbolandi et al. makes clear is that at least in the comparison between 2010-era consumer-level SL hardware and 2013-era ToF hardware there are wins and losses on both sides, making it hard to pick a favorite. Of note is that the monochrome NIR cameras in both Kinects are roughly the same resolution, with the ToF depth sensor even slightly lower at 512 x 424 versus the 640 x 480 of the original SL Kinect.

Kinect Modelling Afterlife

Over the years the proprietary Kinect hardware has been dissected to figure out how to use it for purposes other than making the playing of XBox video games use more energy than fondling a hand-held controller. A recent project by [Stoppi] (in German, see the English-language video below) is a good example of one that uses an original Kinect with the official Microsoft SDK and drivers along with the Skanect software to create 3D models.

This approach is reminiscent of the photogrammetry method, but provides a depth map for each angle around the scene being scanned, which helps immensely when later turning separate snapshots into a coherent 3D model.

In this particular project a turntable is made using an Arduino board and a stepper motor, which allows for precise control over how much the object that is being scanned rotates between snapshots. This control feature is then combined with the scanning software, here Skanect, to create the 3D model along with textures created from the Kinect’s RGB camera.
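
To illustrate why a known rotation between snapshots helps, here is a minimal sketch. It is not [Stoppi]’s code or Skanect’s method. It assumes a hypothetical stepper with 200 full steps per revolution driven at 16× microstepping, and it assumes the points of each snapshot are already expressed relative to the turntable’s vertical rotation axis.

```python
import math


def steps_per_snapshot(snapshots_per_rev, steps_per_rev=200, microsteps=16):
    """Number of (micro)steps to advance the turntable between snapshots."""
    total = steps_per_rev * microsteps
    if total % snapshots_per_rev != 0:
        raise ValueError("snapshot count does not divide the step count evenly")
    return total // snapshots_per_rev


def rotate_about_y(point, angle_deg):
    """Rotate an (x, y, z) point about the vertical (y) axis."""
    x, y, z = point
    a = math.radians(angle_deg)
    return (x * math.cos(a) + z * math.sin(a),
            y,
            -x * math.sin(a) + z * math.cos(a))


def merge_snapshots(snapshots, snapshots_per_rev):
    """Undo the known table rotation so all points share the object's frame."""
    step_deg = 360.0 / snapshots_per_rev
    merged = []
    for index, points in enumerate(snapshots):
        # Snapshot i was taken after the table turned i * step_deg degrees.
        merged.extend(rotate_about_y(p, -index * step_deg) for p in points)
    return merged
```

With these assumed values, 32 snapshots per revolution would mean 100 microsteps between captures. Real scanning software usually refines the alignment further, but a known rotation angle gives it a good starting point.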

Here it should be noted that Skanect has recently been phased out, and was replaced with an Apple mobile app, but you can still find official download links from Structure for now. This is unfortunately a recurring problem with relying on commercial options, whether free or not, as Kinect hardware begins to age out of the market.

Fortunately we can fall back on libfreenect for the original SL Kinect and libfreenect2 for the ToF Kinect. These are userspace drivers that provide effectively full support for all features on these devices. Unfortunately, these projects haven’t seen significant activity over the past years, with the OpenKinect domain name lapsing as well, so before long we may have to resort to purchasing off-the-shelf hardware again, rather than hacking Kinects.

On which note, how different are those commercial consumer-oriented 3D scanners from Kinects, exactly?

Commercial Scanners

It should probably not come as a massive surprise that the 3D scanners that you can purchase on an average consumer budget are highly reminiscent of the Kinect. If we ogle the approximately $350 Creality CR-Scan Ferret Pro, for example, we’d be excused for thinking at first glance that someone stuck a tiny Kinect on top of a stick.

When we look at the user manual for this particular 3D scanner, however, we can see that it’s got one more lens than a Kinect. This is because it uses two NIR cameras for stereoscopic imaging, while keeping the same NIR projector and single RGB camera that we are used to seeing on the Kinect. A 30 FPS capture rate similar to that of the Kinect is claimed, with a 1080p resolution for the RGB camera and ‘up to 0.1 mm’ resolution within its working distance of 150 – 700 mm.

The fundamental technology has of course not changed from the Kinect days, so we’re likely looking at ToF-based depth sensors for these commercial offerings. Improvements will be found in the number of NIR cameras used to get more depth information, higher-resolution NIR and RGB sensors, along with improvements to the algorithms that derive the depth map. Exact details here are of course scarce barring someone tearing one of these units down for a detailed analysis. Unlike the Kinect, modern-day 3D scanners are much more niche and less generalized. This makes them far less attractive to hack than the cheap-ish Kinects, which flooded the market alongside ubiquitous XBox consoles with all of Microsoft’s mass-production muscle behind them.

When looking at the demise of the Kinect in this way, it is somewhat sad to see that the most accessible and affordable 3D scanner option available to both scientists and hobbyists is rapidly becoming a lost memory, with currently available commercial options not quite hitting the same buttons – or price point – and open source options apparently falling back to the excitingly mediocre option of RGB photogrammetry.

Featured image: still from “Point Cloud Test6” by [Simon].

great article and description of the tech. Next time I 3D scan with the Kinect I’ll try it in a dark room.

The quality of the data is so bad that you’re better off using an 8-year-old phone

The point is of course that you get a depth map, rather than just having the RGB images to apply photogrammetry on. Sure, the Kinect’s depth map is fairly low-resolution, but it can make certain scans significantly easier and more precise. Photogrammetry relies on visual marks to determine depth, which aren’t always as obvious or even present.

Having a 1080p CMOS sensor for the NIR camera(s) is of course very useful, and that’s where those newer commercial offerings can be nice.

An iPhone 11 delivers better depth data than the Kinect

and?

and costs a hell of a lot more too

Only if it has a depth scanner (a handful do), which makes it essentially the same system, or MEMS lidar, which some more expensive ones have, which could be better but are typically configured in a way that doesn’t generate better results than this.

Does anyone proof read this before they post online this reads like a child wrote it.

Thank you for your complaint. Pro tip: try proofreading your complaint before you submit it.

Thank you for clapping back to this. Article is very fascinating. Brings me back to the good ole days before Kinect discontinued. Much appreciated.

Do the people making these posts ever contribute to the conversation? Do they propose specific changes or write their own articles?

I successfully 3D scanned two people posing together using a repurposed (1st gen) Kinect sensor in order to CNC machine a wooden sculpture. The scan volume was perfect. Granted, the data from the stitching software was pretty noisy, and it took some practice to scan a complete “skin” without holes, but it got the job done. I’d do it again.

I wouldn’t call it worthwhile given the improved model available, but there’s a “simple” hack possible with the 1st gen. It involves use of a motor to provide an offset to the grid projection, effectively doubling the resolution (at half the frame rate of course).

Did you intentionally not mention the company behind the Skanect software? For anyone else: that company was Occipital, who designed their own structured light device that attached to an iPhone, called the ‘Structure Sensor’. It was far smaller than the Kinect, higher resolution, and went on to be used in the first consumer bounded tracking XR system. They also came out with a new system when their license ran out, since Apple purchased the sensor company used by them and by Microsoft. Their new system used IR stereo cameras, plus a wide angle B/W camera for scanning and SLAM.

Apple of course used an even smaller structured light sensor in the iPhone X for facial recognition that could also be used for scanning and facial motion capture. Its disadvantage is its tight FOV and limited range. This was supplemented in a later year with a true LIDAR based TOF sensor on the back of the phone that can be used for depth tracking, scanning or as an adjunct process for photogrammetry or Gaussian splatting.

The focus of the article is on Kinects, with a look at commercial offerings today. It was not meant to be an exhaustive overview of 3D scanners. I had hoped that this would have been obvious.

Fought with kinects for months, got a Realsense 305, fought with USB driver bugs and power requirements. Gave up when the Intel support said “keep trying different desktops with different windows installs, it will work on one of them”

Now Revopoint seems to have a decent handle on it but naturally closed source so less easy to use for robotics.

It’s pretty unfortunate most of these were immediately purchased and effectively removed from the market once they became useful. Intel’s only “good” implementation was a one shot, overpriced and cancelled, everything else is very DIY. So it’s possible, but a pain.

I’d suggest looking into motion tracking and scanning separately as there are more open source offerings and you can get modern functionality through sensor fusion. Yes, it’s not plug-and-play, but it will work with SLAM/etc. and that’s where we are.

From memory you need the early gen Kinect… Also, if you’re able to layer in a laser splayed vertically and measure that and its refraction, you could overlay a hell of a lot more points onto your meshes :).. I remember seeing someone do it years back.. been looking for my Kinect ever since… I also saw someone do it with a mobile and a laser as well.. I just use that 3D scan app.. but the new phones are shocking for it.. my old phone has alllll the sensors and no problems.. the newer phones seem to neglect lidar n things if they aren’t a high end phone, annoyingly… So my old phone works great for it.. I also change what I dust it with depending on what it is, that helps a lot too.. like clay dust or baby powder, strong line chalk etc :)… Graphite bad… Very very bad lolol.. I touched this lamp that’s from some sculpture, worth more than my life’s investments, and didn’t realise it was on me… That took a hell of a lot of detail work to clean that frosted glass 😮💨😅

You don’t need a kinect to use a horizontal and/or vertical scanning laser arrangement. There’s nothing explicitly wrong with this method, it’s the oldest one really (used to height map Mars even, just using the Sun instead of a laser), but it does have shortcomings.

The main operational issue is essentially that it’s slow, the design of the kinect is essentially a collection of specific mitigation strategies addressing that. The main data issues are that it’s just as sensitive to light pollution, reflective and refractive surfaces as the kinect (sometimes more), and the large amount of unstructured point cloud data needs significant post-processing (something the kinect tries to resolve per-frame, with pros and cons).

Your surface prep ideas are good, and now you know graphite is bad news (it’s also conductive and reflective btw). Unfortunately the demise of Google’s project Tango essentially handed the market for this to Apple, and that has caused a lot of stagnation in consumer products.

Solutions to the above problems (including in current-gen lidar based scanners) involve sensor fusion similar to how the phone tools work, but typically more accurate, combining laser depth data with high resolution imagery. E.g. most of them are essentially an improved kinect, purpose-built for this. It has been found that blue lasers are a bit better at determining reflective surfaces, but some tools also use the image data to corroborate the depth the lasers return for a boost in accuracy.

A good photogrammetry setup will beat any kinect/realsense setup in terms of quality and resolution if done right.

It won’t be anywhere near as fast or easy to use though, and a well done approach using SLAM and a bias comparison for flagged SIFT candidate data referenced against incoming depth can be much, much more accurate despite the lower resolution. This is literally what high end commercial scanners with texture output do by the way. It’s as much of a contributor to the jump in accuracy and drop in price of dedicated devices on the market as the availability of cheaper sensors.

I did my Masters thesis in this area. Search for “Voxel octree 3D scanning”. The algorithm I came up with was janky, but at least allowed me to generate watertight 3D meshes from a first gen Kinect. Fun stuff at the time.

And I’ve used it. I remember going over your work at the time, thanks!

We were doing this over a decade ago?